1 Notations and data

1.1 Notations

This section aims at providing the formal mathematical conventions that will be used throughout the book.

Bold notations indicate vectors and matrices. We use capital letters for matrices and lower case letters for vectors. \(\mathbf{v}'\) and \(\mathbf{M}'\) denote the transposes of \(\mathbf{v}\) and \(\mathbf{M}\). \(\mathbf{M}=[m]_{i,j}\), where \(i\) is the row index and \(j\) the column index.

We will work with two notations in parallel. The first one is the pure machine learning notation in which the labels (also called output, dependent variables or predicted variables) \(\mathbf{y}=y_i\) are approximated by functions of features \(\mathbf{X}_i=(x_{i,1},\dots,x_{i,K})\). The dimension of the feature matrix \(\mathbf{X}\) is \(I\times K\): there are \(I\) instances, records, or observations and each one of them has \(K\) attributes, features, inputs, or predictors which will serve as independent and explanatory variables (all these terms will be used interchangeably). Sometimes, to ease notations, we will write \(\textbf{x}_i\) for one instance (one row) of \(\textbf{X}\) or \(\textbf{x}_k\) for one (feature) column vector of \(\textbf{X}\).

The second notation type pertains to finance and will directly relate to the first. We will often work with discrete returns \(r_{t,n}=p_{t,n}/p_{t-1,n}-1\) computed from price data. Here \(t\) is the time index and \(n\) the asset index. Unless specified otherwise, the return is always computed over one period, though this period can sometimes be one month or one year. Whenever confusion might occur, we will specify other notations for returns.

In line with our previous conventions, the number of return dates will be \(T\) and the number of assets, \(N\). The features or characteristics of assets will be denoted with \(x_{t,n}^{(k)}\): it is the time-\(t\) value of the \(k^{th}\) attribute of firm or asset \(n\). In stacked notation, \(\mathbf{x}_{t,n}\) will stand for the vector of characteristics of asset \(n\) at time \(t\). Moreover, \(\mathbf{r}_t\) stands for all returns at time \(t\) while \(\mathbf{r}_n\) stands for all returns of asset \(n\). Often, returns will play the role of the dependent variable, or label (in ML terms). For the riskless asset, we will use the notation \(r_{t,f}\).

The link between the two notations will most of the time be the following. One instance (or observation) \(i\) will consist of one couple (\(t,n\)) of one particular date and one particular firm (if the data is perfectly rectangular with no missing field, \(I=T\times N\)). The label will usually be some performance measure of the firm computed over some future period, while the features will consist of the firm attributes at time \(t\). Hence, the purpose of the machine learning engine in factor investing will be to determine the model that maps the time-\(t\) characteristics of firms to their future performance.

In terms of canonical matrices: \(\mathbf{I}_N\) will denote the \((N\times N)\) identity matrix.

From the probabilistic literature, we employ the expectation operator \(\mathbb{E}[\cdot]\) and the conditional expectation \(\mathbb{E}_t[\cdot]\), where the corresponding filtration \(\mathcal{F}_t\) corresponds to all information available at time \(t\). More precisely, \(\mathbb{E}_t[\cdot]=\mathbb{E}[\cdot | \mathcal{F}_t]\). \(\mathbb{V}[\cdot]\) will denote the variance operator. Depending on the context, probabilities will be written simply \(P\), but sometimes we will use the heavier notation \(\mathbb{P}\). Probability density functions (pdfs) will be denoted with lowercase letters (\(f\)) and cumulative distribution functions (cdfs) with uppercase letters (\(F\)). We will write equality in distribution as \(X \overset{d}{=}Y\), which is equivalent to \(F_X(z)=F_Y(z)\) for all \(z\) on the support of the variables. For a random process \(X_t\), we say that it is stationary if the law of \(X_t\) is constant through time, i.e., \(X_t\overset{d}{=}X_s\), where \(\overset{d}{=}\) means equality in distribution.

Sometimes, asymptotic behaviors will be characterized with the usual Landau notation \(o(\cdot)\) and \(O(\cdot)\). The symbol \(\propto\) refers to proportionality: \(x\propto y\) means that \(x\) is proportional to \(y\). With respect to derivatives, we use the standard notation \(\frac{\partial}{\partial x}\) when differentiating with respect to \(x\). We resort to the compact symbol \(\nabla\) when all derivatives are computed (gradient vector).

In equations, the left-hand side and right-hand side can be written more compactly: l.h.s. and r.h.s., respectively.

Finally, we turn to functions. We list a few below:

- \(1_{\{x \}}\): the indicator function of the condition \(x\), which is equal to one if \(x\) is true and to zero otherwise.

- \(\phi(\cdot)\) and \(\Phi(\cdot)\) are the standard Gaussian pdf and cdf.

- card\((\cdot)=\#(\cdot)\) are two notations for the cardinal function which evaluates the number of elements in a given set (provided as argument of the function).

- \(\lfloor \cdot \rfloor\) is the integer part function.

- for a real number \(x\), \([x]^+\) is the positive part of \(x\), that is \(\max(0,x)\).

- tanh\((\cdot)\) is the hyperbolic tangent: tanh\((x)=\frac{e^x-e^{-x}}{e^x+e^{-x}}\).

- ReLu\((\cdot)\) is the rectified linear unit: ReLu\((x)=\max(0,x)\).

- s\((\cdot)\) will be the softmax function: \(s(\textbf{x})_i=\frac{e^{x_i}}{\sum_{j=1}^Je^{x_j}}\), where the subscript \(i\) refers to the \(i^{th}\) element of the vector.

1.2 Dataset

Throughout the book, and for the sake of reproducibility, we will illustrate the concepts we present with examples of implementation based on a single financial dataset available at https://github.com/shokru/mlfactor.github.io/tree/master/material. This dataset comprises information on 1,207 stocks listed in the US (possibly originating from Canada or Mexico). The time range starts in November 1998 and ends in March 2019. For each point in time, 93 characteristics describe the firms in the sample. These attributes cover a wide range of topics:

-

valuation (earning yields, accounting ratios);

-

profitability and quality (return on equity);

-

momentum and technical analysis (past returns, relative strength index);

-

risk (volatilities);

-

estimates (earnings-per-share);

- volume and liquidity (share turnover).

The sample is not perfectly rectangular: there are no missing points, but the number of firms and their attributes is not constant through time. This makes the computations in the backtest more tricky, but also more realistic.

library(tidyverse) # Activate the data science package

library(lubridate) # Activate the date management package

load("data_ml.RData") # Load the data

data_ml <- data_ml %>%

filter(date > "1999-12-31", # Keep the date with sufficient data points

date < "2019-01-01") %>%

arrange(stock_id, date) # Order the data

data_ml[1:6, 1:6] # Sample values## # A tibble: 6 × 6

## stock_id date Advt_12M_Usd Advt_3M_Usd Advt_6M_Usd Asset_Turnover

## <int> <date> <dbl> <dbl> <dbl> <dbl>

## 1 1 2000-01-31 0.41 0.39 0.42 0.19

## 2 1 2000-02-29 0.41 0.39 0.4 0.19

## 3 1 2000-03-31 0.4 0.37 0.37 0.2

## 4 1 2000-04-30 0.39 0.36 0.37 0.2

## 5 1 2000-05-31 0.4 0.42 0.4 0.2

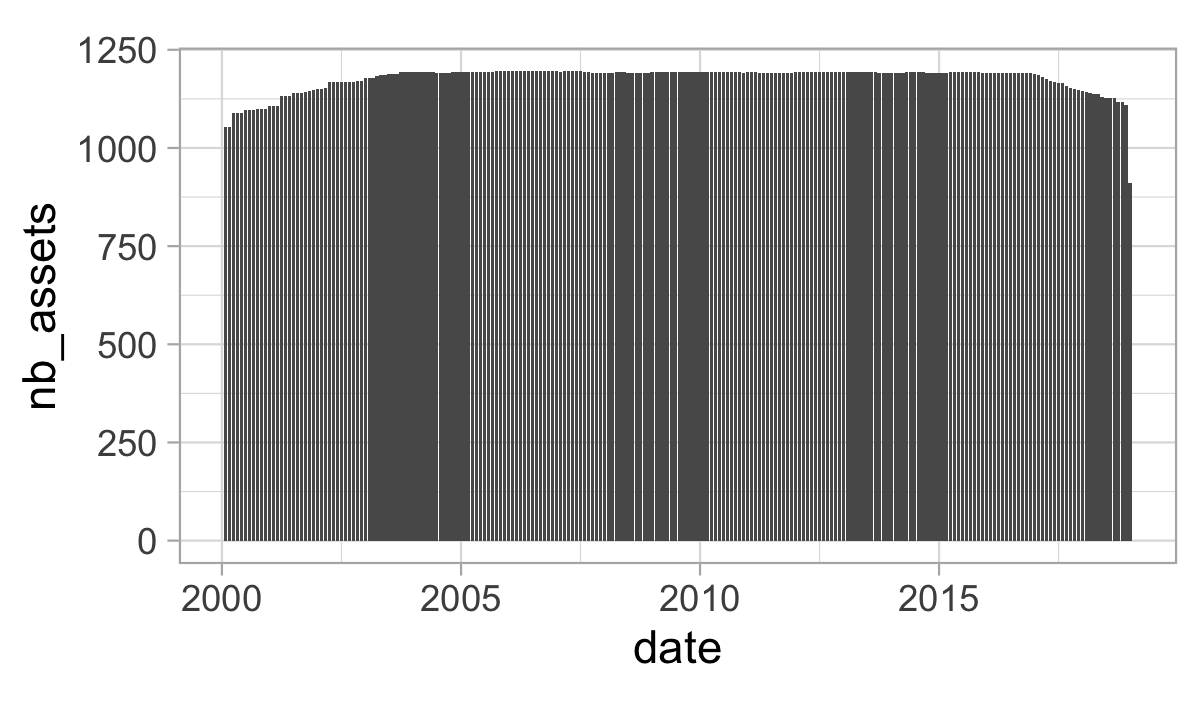

## 6 1 2000-06-30 0.41 0.47 0.42 0.21The data has 99 columns and 268336 rows. The first two columns indicate the stock identifier and the date. The next 93 columns are the features (see Table 17.1 in the Appendix for details). The last four columns are the labels. The points are sampled at the monthly frequency. As is always the case in practice, the number of assets changes with time, as is shown in Figure 1.1.

data_ml %>%

group_by(date) %>% # Group by date

summarize(nb_assets = stock_id %>% # Count nb assets

as.factor() %>% nlevels()) %>%

ggplot(aes(x = date, y = nb_assets)) + geom_col() + # Plot

theme_light() + coord_fixed(3)

FIGURE 1.1: Number of assets through time.

There are four immediate labels in the dataset: R1M_Usd, R3M_Usd, R6M_Usd and R12M_Usd, which correspond to the 1-month, 3-month, 6-month and 12-month future/forward returns of the stocks. The returns are total returns, that is, they incorporate potential dividend payments over the considered periods. This is a better proxy of financial gain compared to price returns only. We refer to the analysis of Hartzmark and Solomon (2019) for a study on the impact of decoupling price returns and dividends. These labels are located in the last 4 columns of the dataset. We provide their descriptive statistics below.

## # A tibble: 4 × 5

## Label mean sd min max

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 R12M_Usd 0.137 0.738 -0.991 96.0

## 2 R1M_Usd 0.0127 0.176 -0.922 30.2

## 3 R3M_Usd 0.0369 0.328 -0.929 39.4

## 4 R6M_Usd 0.0723 0.527 -0.98 107.In anticipation for future models, we keep the name of the predictors in memory. In addition, we also keep a much shorter list of predictors.

features <- colnames(data_ml[3:95]) # Keep the feature's column names (hard-coded, beware!)

features_short <- c("Div_Yld", "Eps", "Mkt_Cap_12M_Usd", "Mom_11M_Usd",

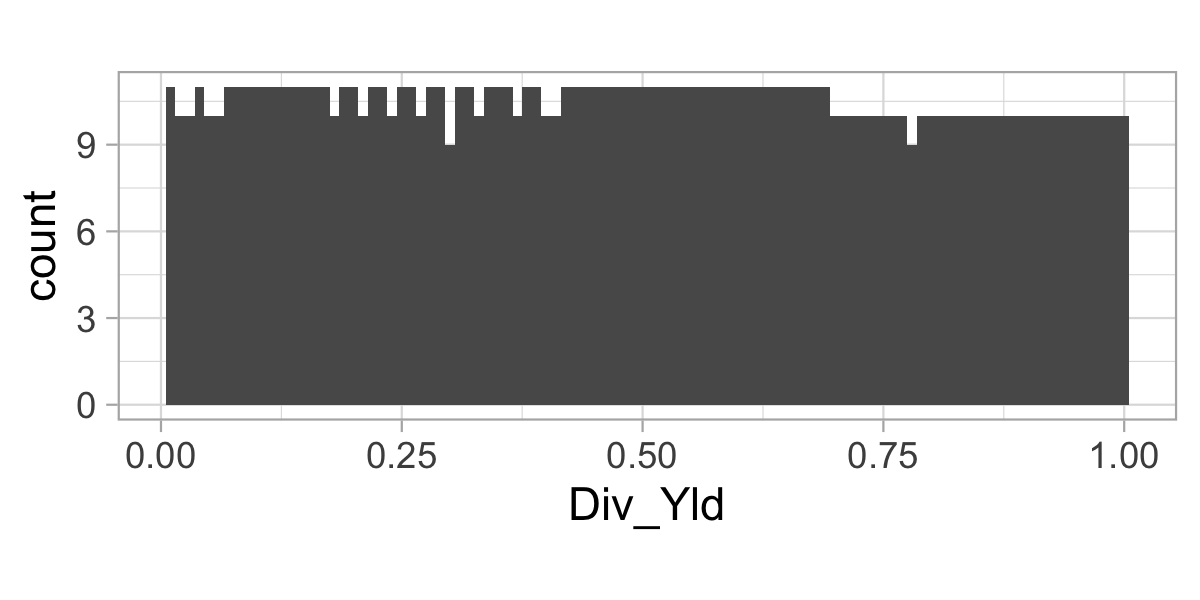

"Ocf", "Pb", "Vol1Y_Usd")The predictors have been uniformized, that is, for any given feature and time point, the distribution is uniform. Given 1,207 stocks, the graph below cannot display a perfect rectangle.

data_ml %>%

filter(date == "2000-02-29") %>%

ggplot(aes(x = Div_Yld)) + geom_histogram(bins = 100) +

theme_light() + coord_fixed(0.03)

FIGURE 1.2: Distribution of the dividend yield feature on date 2000-02-29.

The original labels (future returns) are numerical and will be used for regression exercises, that is, when the objective is to predict a scalar real number. Sometimes, the exercises can be different and the purpose may be to forecast categories (also called classes), like “buy”, “hold” or “sell”. In order to be able to perform this type of classification analysis, we create additional labels that are categorical.

data_ml <- data_ml %>%

group_by(date) %>% # Group by date

mutate(R1M_Usd_C = R1M_Usd > median(R1M_Usd), # Create the categorical labels

R12M_Usd_C = R12M_Usd > median(R12M_Usd)) %>%

ungroup() %>%

mutate_if(is.logical, as.factor)The new labels are binary: they are equal to 1 (true) if the original return is above that of the median return over the considered period and to 0 (false) if not. Hence, at each point in time, half of the sample has a label equal to zero and the other half to one: some stocks overperform and others underperform.

In machine learning, models are estimated on one portion of data (training set) and then tested on another portion of the data (testing set) to assess their quality. We split our sample accordingly.

separation_date <- as.Date("2014-01-15")

training_sample <- filter(data_ml, date < separation_date)

testing_sample <- filter(data_ml, date >= separation_date)We also keep in memory a few key variables, like the list of asset identifiers and a rectangular version of returns. For simplicity, in the computation of the latter, we shrink the investment universe to keep only the stocks for which we have the maximum number of points.

stock_ids <- levels(as.factor(data_ml$stock_id)) # A list of all stock_ids

stock_days <- data_ml %>% # Compute the number of data points per stock

group_by(stock_id) %>% summarize(nb = n())

stock_ids_short <- stock_ids[which(stock_days$nb == max(stock_days$nb))] # Stocks with full data

returns <- data_ml %>% # Compute returns, in matrix format, in 3 steps:

filter(stock_id %in% stock_ids_short) %>% # 1. Filtering the data

dplyr::select(date, stock_id, R1M_Usd) %>% # 2. Keep returns along with dates & firm names

pivot_wider(names_from = "stock_id",

values_from = "R1M_Usd") # 3. Put in matrix shape