16 Reinforcement learning

Due to its increasing popularity within the Machine Learning community, we dedicate a chapter to reinforcement learning (RL). In 2019 only, more than 25 papers dedicated to RL have been submitted to (or updated on) arXiv under the q:fin (quantitative finance) classification. Applications to trading include Xiong et al. (2018) and Théate and Ernst (2020). Market microstructure is a focal framework (H. Wei et al. (2019), Ferreira (2020), Karpe et al. (2020)).

Moreover, an early survey of RL-based portfolios is compiled in Sato (2019) (see also Z. Zhang, Zohren, and Roberts (2020)) and general financial applications are discussed in Kolm and Ritter (2019b), Meng and Khushi (2019), Charpentier, Elie, and Remlinger (2020) and Mosavi et al. (2020). This shows again that RL has recently gained traction among the quantitative finance community.33

While RL is a framework much more than a particular algorithm, its efficient application in portfolio management is not straightforward, as we will show. For a discussion on the generalization ability of RL algorithms, we refer to Packer et al. (2018) and D. Ghosh et al. (2021).

16.1 Theoretical layout

16.1.1 General framework

In this section, we introduce the core concepts of RL and follow relatively closely the notations (and layout) of Sutton and Barto (2018), which is widely considered as a solid reference in the field, along with Bertsekas (2017). One central tool in the field is called the Markov Decision Process (MDP, see Chapter 3 in Sutton and Barto (2018)).

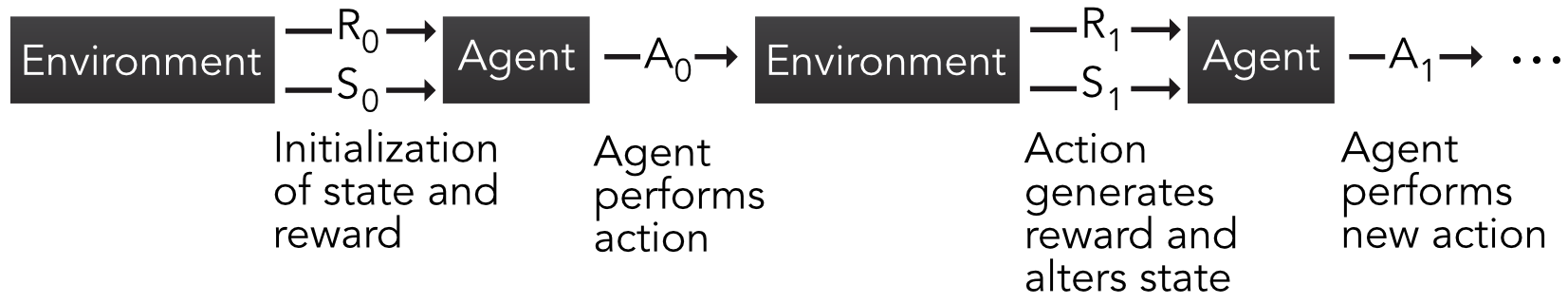

MDPs, like all RL frameworks, involve the interaction between an agent (e.g., a trader or portfolio manager) and an environment (e.g., a financial market). The agent performs actions that may alter the state of environment and gets a reward (possibly negative) for each action. This short sequence can be repeated an arbitrary number of times, as is shown in Figure 16.1.

FIGURE 16.1: Scheme of Markov Decision Process. R, S and A stand for reward, state and action, respectively.

Given initialized values for the state of the environment (\(S_0\)) and reward (usually \(R_0=0\)), the agent performs an action (e.g., invests in some assets). This generates a reward \(R_1\) (e.g., returns, profits, Sharpe ratio) and also a future state of the environment (\(S_1\)). Based on that, the agent performs a new action and the sequence continues. When the sets of states, actions and rewards are finite, the MDP is logically called finite. In a financial framework, this is somewhat unrealistic and we discuss this issue later on. It nevertheless is not hard to think of simplified and discretized financial problems. For instance, the reward can be binary: win money versus lose money. In the case of only one asset, the action can also be dual: investing versus not investing. When the number of assets is sufficiently small, it is possible to set fixed proportions that lead to a reasonable number of combinations of portfolio choices, etc.

We pursue our exposé with finite MDPs; they are the most common in the literature and their formal treatment is simpler. The relative simplicity of MDPs helps grasp the concepts that are common to other RL techniques. As is often the case with Markovian objects, the key notion is that of transition probability:

\[\begin{equation} \tag{16.1} p(s',r|s,a)=\mathbb{P}\left[S_t=s',R_t=r | S_{t-1}=s,A_{t-1}=a \right], \end{equation}\]

which is the probability of reaching state \(s'\) and reward \(r\) at time \(t\), conditionally on being in state \(s\) and performing action \(a\) at time \(t-1\). The finite sets of states and actions will be denoted with \(\mathcal{S}\) and \(\mathcal{A}\) henceforth. Sometimes, this probability is averaged over the set of rewards which gives the following decomposition: \[\begin{align} G_t&=\sum_{k=0}^T\gamma^kR_{t+k+1} \nonumber \\ \tag{16.3} &=R_{t+1} +\gamma G_{t+1}, \end{align}\]

i.e., it is a discounted version of the reward, where the discount factor is \(\gamma \in (0,1]\). The horizon \(T\) may be infinite, which is why \(\gamma\) was originally introduced. Assuming the rewards are bounded, the infinite sum may diverge for \(\gamma=1\). That is the case if rewards don’t decrease with time and there is no reason why they should. When \(\gamma <1\) and rewards are bounded, convergence is assured. When \(T\) is finite, the task is called episodic and, otherwise, it is said to be continuous.

In RL, the focal unknown to be optimized or learned is the policy \(\pi\), which drives the actions of the agent. More precisely, \(\pi(a,s)=\mathbb{P}[A_t=a|S_t=s]\), that is, \(\pi\) equals the probability of taking action \(a\) if the state of the environment is \(s\). This means that actions are subject to randomness, just like for mixed strategies in game theory. While this may seem disappointing because an investor would want to be sure to take the best action, it is also a good reminder that the best way to face random outcomes may well be to randomize actions as well.

Finally, in order to try to determine the best policy, one key indicator is the so-called value function: \[\begin{equation} \tag{16.4} v_\pi(s)=\mathbb{E}_\pi\left[ G_t | S_t=s \right], \end{equation}\]

where the time index \(t\) is not very relevant and omitted in the notation of the function. The index \(\pi\) under the expectation operator \(\mathbb{E}[\cdot]\) simply indicates that the average is taken when the policy \(\pi\) is enforced. The value function is simply equal to the average gain conditionally on the state being equal to \(s\). In financial terms, this is equivalent to the average profit if the agent takes actions driven by \(\pi\) when the market environment is \(s\). More generally, it is also possible to condition not only on the state, but also on the action taken. We thus introduce the \(q_\pi\) action-value function: \[\begin{equation} \tag{16.5} q_\pi(s,a)=\mathbb{E}_\pi\left[ G_t | S_t=s, \ A_t=a \right]. \end{equation}\]

The \(q_\pi\) function is highly important because it gives the average gain when the state and action are fixed. Hence, if the current state is known, then one obvious choice is to select the action for which \(q_\pi(s,\cdot)\) is the highest. Of course, this is the best solution if the optimal value of \(q_\pi\) is known, which is not always the case in practice. The value function can easily be accessed via \(q_\pi\): \(v_\pi(s)=\sum_a \pi(a,s)q_\pi(s,a)\).

The optimal \(v_\pi\) and \(q_\pi\) are straightforwardly defined as \[v_*(s)=\underset{\pi}{\max} \, v_\pi(s), \ \forall s\in \mathcal{S}, \quad \text{ and } \quad q_*(s,a) =\underset{\pi}{\max} \, q_\pi(s,a), \ \forall (s,a)\in \mathcal{S}\times \mathcal{A}.\]

If only \(v_*(s)\) is known, then the agent must span the set of actions and find those that yield the maximum value for any given state \(s\).

Finding these optimal values is a very complicated task and many articles are dedicated to solving this challenge. One reason why finding the best \(q_\pi(s,a)\) is difficult is because it depends on two elements (\(s\) and \(a\)) on one side and \(\pi\) on the other. Usually, for a fixed policy \(\pi\), it can be time consuming to evaluate \(q_\pi(s,a)\) for a given stream of actions, states and rewards. Once \(q_\pi(s,a)\) is estimated, then a new policy \(\pi'\) must be tested and evaluated to determine if it is better than the original one. Thus, this iterative search for a good policy can take long. For more details on policy improvement and value function updating, we recommend chapter 4 of Sutton and Barto (2018) which is dedicated to dynamic programming.

16.1.2 Q-learning

An interesting shortcut to the problem of finding \(v_*(s)\) and \(q_*(s,a)\) is to remove the dependence on the policy. Consequently, there is then of course no need to iteratively improve it. The central relationship that is required to do this is the so-called Bellman equation that is satisfied by \(q_\pi(s,a)\). We detail its derivation below. First of all, we recall that \[\begin{align*} q_\pi(s,a) &= \mathbb{E}_\pi[G_t|S_t=s,A_t=a] \\ &= \mathbb{E}_\pi[R_{t+1}+ \gamma G_{t+1}|S_t=s,A_t=a], \end{align*}\] where the second equality stems from (16.3). The expression \(\mathbb{E}_\pi[R_{t+1}|S_t=s,A_t=a]\) can be further decomposed. Since the expectation runs over \(\pi\), we need to sum over all possible actions \(a'\) and states \(s'\) and resort to \(\pi(a',s')\). In addition, the sum on the \(s'\) and \(r\) arguments of the probability \(p(s',r|s,a)=\mathbb{P}\left[S_{t+1}=s',R_{t+1}=r | S_t=s,A_t=a \right]\) gives access to the distribution of the random couple \((S_{t+1},R_{t+1})\) so that in the end \(\mathbb{E}_\pi[R_{t+1}|S_t=s,A_t=a]=\sum_{a', r,s'}\pi(a',s')p(s',r|s,a) r\). A similar reasoning applies to the second portion of \(q_\pi\) and: \[\begin{align} q_\pi(s,a) &=\sum_{a',r, s'}\pi(a',s')p(s',r|s,a) \left[ r+\gamma \mathbb{E}_\pi[ G_{t+1}|S_t=s',A_t=a']\right] \nonumber \\ \tag{16.6} &=\sum_{a',r,s'}\pi(a',s')p(s',r|s,a) \left[ r+\gamma q_\pi(s',a')\right]. \end{align}\]

This equation links \(q_\pi(s,a)\) to the future \(q_\pi(s',a')\) from the states and actions \((s',a')\) that are accessible from \((s,a)\).

Notably, Equation (16.6) is also true for the optimal action-value function \(q_*=\underset{\pi}{\max} \, q_\pi(s,a)\):

\[\begin{align} q_*(s,a) &= \underset{a'}{\max} \sum_{r,s'}p(s',r|s,a) \left[ r+\gamma q_*(s',a')\right], \\ &= \mathbb{E}_{\pi^*}[r|s,a]+ \gamma \, \sum_{r,s'}p(s',r|s,a) \left( \underset{a'}{\max} q_*(s',a') \right) \tag{16.7} \end{align}\]

because one optimal policy is one that maximizes \(q_\pi(s,a)\), for a given state \(s\) and over all possible actions \(a\). This expression is central to a cornerstone algorithm in reinforcement learning called \(Q\)-learning (the formal proof of convergence is outlined in Watkins and Dayan (1992)). In \(Q\)-learning, the state-action function no longer depends on policy and is written with capital \(Q\). The process is the following:

Initialize values \(Q(s,a)\) for all states \(s\) and actions \(a\). For each episode:

\[ (\textbf{QL}) \quad \left\{

\begin{array}{l}

\text{0. Initialize state } S_0 \text{ and for each iteration } i \text{ until the end of the episode;} \\

\text{1. observe state } s_i; \\

\text{2. perform action } a_i \text{(depending on } Q); \\

\text{3. receive reward }r_{i+1} \text{ and observe state } s_{i+1}; \\

\text{4. Update } Q \text{ as follows: }

\end{array} \right.\]

\[\begin{equation} \tag{16.8} Q_{i+1}(s_i,a_i) \longleftarrow Q_i(s_i,a_i) + \eta \left(\underbrace{r_{i+1}+\gamma \, \underset{a}{\max} \, Q_i(s_{i+1},a)}_{\text{echo of Bellman eq.}}-Q_i(s_i,a_i) \right) \end{equation}\]

The underlying reason this update rule works can be linked to fixed point theorems of contraction mappings. If a function \(f\) satisfies \(|f(x)-f(y)|< \delta |x-y|\) (Lipshitz continuity), then a fixed point \(z\) satisfying \(f(z)=z\) can be iteratively obtained via \(z \leftarrow f(z)\). This updating rule converges to the fixed point. Equation (16.7) can be solved using a similar principle, except that a learning rate \(\eta\) slows the learning process but also technically ensures convergence under technical assumptions.

More generally, (16.8) has a form that is widespread in reinforcement learning that is summarized in Equation (2.4) of Sutton and Barto (2018): \[\begin{equation} \tag{16.9} \text{New estimate} \leftarrow \text{Old estimate + Step size (}i.e., \text{ learning rate)} \times (\text{Target - Old estimate}), \end{equation}\]

where the last part can be viewed as an error term. Starting from the old estimate, the new estimate therefore goes in the ‘right’ (or sought) direction, modulo a discount term that makes sure that the magnitude of this direction is not too large. The update rule in (16.8) is often referred to as ‘temporal difference’ learning because it is driven by the improvement yielded by estimates that are known at time \(t+1\) (target) versus those known at time \(t\).

One important step of the Q-learning sequence (QL) is the second one where the action \(a_i\) is picked. In RL, the best algorithms combine two features: exploitation and exploration. Exploitation is when the machine uses the current information at its disposal to choose the next action. In this case, for a given state \(s_i\), it chooses the action \(a_i\) that maximizes the expected reward \(Q_i(s_i,a_i)\). While obvious, this choice is not optimal if the current function \(Q_i\) is relatively far from the true \(Q\). Repeating the locally optimal strategy is likely to favor a limited number of actions, which will narrowly improve the accuracy of the \(Q\) function.

In order to gather new information stemming from actions that have not been tested much (but that can potentially generate higher rewards), exploration is needed. This is when an action \(a_i\) is chosen randomly. The most common way to combine these two concepts is called \(\epsilon\)-greedy exploration. The action \(a_i\) is assigned according to:

\[\begin{equation} \tag{16.10} a_i=\left\{ \begin{array}{c l} \underset{a}{\text{argmax}} \ Q_i(s_i,a) & \text{ with probability } 1-\epsilon \\ \text{randomly (uniformly) over } \mathcal{A} & \text{ with probability } \epsilon \end{array}\right. . \end{equation}\]

Thus, with probability \(\epsilon\), the algorithm explores and with probability \(1-\epsilon\), it exploits the current knowledge of the expected reward and picks the best action. Because all actions have a non-zero probability of being chosen, the policy is called “soft”. Indeed, then best action has a probability of selection equal to \(1-\epsilon(1-\text{card}(\mathcal{A})^{-1})\), while all other actions are picked with probability \(\epsilon/\text{card}(\mathcal{A})\).

16.1.3 SARSA

In \(Q\)-learning, the algorithm seeks to find the action-value function of the optimal policy. Thus, the policy that is followed to pick actions is different from the one that is learned (via \(Q\)). Such algorithms are called off-policy. On-policy algorithms seek to improve the estimation of the action-value function \(q_\pi\) by continuously acting according to the policy \(\pi\). One canonical example of on-policy learning is the SARSA method which requires two consecutive states and actions SARSA. The way the quintuple \((S_t,A_t,R_{t+1}, S_{t+1}, A_{t+1})\) is processed is presented below.

The main difference between \(Q\) learning and SARSA is the update rule. In SARSA, it is given by \[\begin{equation} \tag{16.11} Q_{i+1}(s_i,a_i) \longleftarrow Q_i(s_i,a_i) + \eta \left(r_{i+1}+\gamma \, Q_i(s_{i+1},a_{i+1})-Q_i(s_i,a_i) \right) \end{equation}\]

The improvement comes only from the local point \(Q_i(s_{i+1},a_{i+1})\) that is based on the new states and actions (\(s_{i+1},a_{i+1}\)), whereas in \(Q\)-learning, it comes from all possible actions of which only the best is retained \(\underset{a}{\max} \, Q_i(s_{i+1},a)\).

A more robust but also more computationally demanding version of SARSA is expected SARSA in which the target \(Q\) function is averaged over all actions: \[\begin{equation} \tag{16.12} Q_{i+1}(s_i,a_i) \longleftarrow Q_i(s_i,a_i) + \eta \left(r_{i+1}+\gamma \, \sum_a \pi(a,s_{i+1}) Q_i(s_{i+1},a) -Q_i(s_i,a_i) \right) \end{equation}\]

Expected SARSA is less volatile than SARSA because the latter is strongly impacted by the random choice of \(a_{i+1}\). In expected SARSA, the average smoothes the learning process.

16.2 The curse of dimensionality

Let us first recall that reinforcement learning is a framework that is not linked to a particular algorithm. In fact, different tools can very well co-exist in a RL task (AlphaGo combined both tree methods and neural networks, see Silver et al. (2016)). Nonetheless, any RL attempt will always rely on the three key concepts: the states, actions and rewards. In factor investing, they are fairly easy to identify, though there is always room for interpretation. Actions are evidently defined by portfolio compositions. The states can be viewed as the current values that describe the economy: as a first-order approximation, it can be assumed that the feature levels fulfill this role (possibly conditioned or complemented with macro-economic data). The rewards are even more straightforward. Returns or any relevant performance metric34 can account for rewards.

A major problem lies in the dimensionality of both states and actions. Assuming an absence of leverage (no negative weights), the actions take values on the simplex \[\begin{equation} \tag{16.13} \mathbb{S}_N=\left\{ \mathbf{x} \in \mathbb{R}^N\left|\sum_{n=1}^Nx_n=1, \ x_n\ge 0, \ \forall n=1,\dots,N \right.\right\} \end{equation}\] and assuming that all features have been uniformized, their space is \([0,1]^{NK}\). Needless to say, the dimensions of both spaces are numerically impractical.

A simple solution to this problem is discretization: each space is divided into a small number of categories. Some authors do take this route. In S. Y. Yang, Yu, and Almahdi (2018), the state space is discretized into three values depending on volatility, and actions are also split into three categories. Bertoluzzo and Corazza (2012), Xiong et al. (2018) and Taghian, Asadi, and Safabakhsh (2020) also choose three possible actions (buy, hold, sell). In Almahdi and Yang (2019), the learner is expected to yield binary signals for buying or shorting. Garcı́a-Galicia, Carsteanu, and Clempner (2019) consider a larger state space (8 elements) but restrict the action set to 3 options.35 In terms of the state space, all articles assume that the state of the economy is determined by prices (or returns).

One strong limitation of these approaches is the marked simplification they imply. Realistic discretizations are numerically intractable when investing in multiple assets. Indeed, splitting the unit interval in \(h\) points yields \(h^{NK}\) possibilities for feature values. The number of options for weight combinations is exponentially increasing with \(N\). As an example: just 10 possible values for 10 features of 10 stocks yield \(10^{100}\) permutations.

The problems mentioned above are of course not restricted to portfolio construction. Many solutions have been proposed to solve Markov Decision Processes in continuous spaces. We refer for instance to Section 4 in Powell and Ma (2011) for a review of early methods (outside finance).

This curse of dimensionality is accompanied by the fundamental question of training data. Two options are conceivable: market data versus simulations. Under a given controlled generator of samples, it is hard to imagine that the algorithm will beat the solution that maximizes a given utility function. If anything, it should converge towards the static optimal solution under a stationary data generating process (see, e.g., Chaouki et al. (2020) for trading tasks), which is by the way a very strong modelling assumption.

This leaves market data as a preferred solution but even with large datasets, there is little chance to cover all the (actions, states) combinations mentioned above. Characteristics-based datasets have depths that run through a few decades of monthly data, which means several hundreds of time-stamps at most. This is by far too limited to allow for a reliable learning process. It is always possible to generate synthetic data (as in Yu et al. (2019)), but it is unclear that this will solidly improve the performance of the algorithm.

16.3 Policy gradient

16.3.1 Principle

Beyond the discretization of action and state spaces, a powerful trick is parametrization. When \(a\) and \(s\) can take discrete values, action-value functions must be computed for all pairs \((a,s)\), which can be prohibitively cumbersome. An elegant way to circumvent this problem is to assume that the policy is driven by a relatively modest number of parameters. The learning process is then focused on optimizing this set of parameters \(\boldsymbol{\theta}\). We then write \(\pi_{\boldsymbol{\theta}}(a,s)\) for the probability of choosing action \(a\) in state \(s\). One intuitive way to define \(\pi_{\boldsymbol{\theta}}(a,s)\) is to resort to a soft-max form: \[\begin{equation} \tag{16.14} \pi_{\boldsymbol{\theta}}(a,s) = \frac{e^{\boldsymbol{\theta}'\textbf{h}(a,s)}}{\sum_{b}e^{\boldsymbol{\theta}'\textbf{h}(b,s)}}, \end{equation}\] where the output of function \(\textbf{h}(a,s)\), which has the same dimension as \(\boldsymbol{\theta}\) is called a feature vector representing the pair \((a,s)\). Typically, \(\textbf{h}\) can very well be a simple neural network with two input units and an output dimension equal to the length of \(\boldsymbol{\theta}\).

One desired property for \(\pi_{\boldsymbol{\theta}}\) is that it be differentiable with respect to \(\boldsymbol{\theta}\) so that \(\boldsymbol{\theta}\) can be improved via some gradient method. The most simple and intuitive results about policy gradients are known in the case of episodic tasks (finite horizon) for which it is sought to maximize the average gain \(\mathbb{E}_{\boldsymbol{\theta}}[G_t]\) where the gain is defined in Equation (16.3). The expectation is computed according to a particular policy that depends on \(\boldsymbol{\theta}\), this is why we use a simple subscript. One central result is the so-called policy gradient theorem which states that

\[\begin{equation} \tag{16.15} \nabla \mathbb{E}_{\boldsymbol{\theta}}[G_t]=\mathbb{E}_{\boldsymbol{\theta}} \left[G_t\frac{\nabla \pi_{\boldsymbol{\theta}}}{\pi_{\boldsymbol{\theta}}} \right]. \end{equation}\]

This result can then be used for gradient ascent: when seeking to maximize a quantity, the parameter change must go in the upward direction:

\[\begin{equation} \tag{16.16} \boldsymbol{\theta} \leftarrow \boldsymbol{\theta} + \eta \nabla \mathbb{E}_{\boldsymbol{\theta}}[G_t]. \end{equation}\]

This simple update rule is known as the REINFORCE algorithm. One improvement of this simple idea is to add a baseline, and we refer to section 13.4 of Sutton and Barto (2018) for a detailed account on this topic.

16.3.2 Extensions

A popular extension of REINFORCE is the so-called actor-critic (AC) method which combines policy gradient with \(Q\)- or \(v\)-learning. The AC algorithm can be viewed as some kind of mix between policy gradient and SARSA. A central requirement is that the state-value function \(v(\cdot)\) be a differentiable function of some parameter vector \(\textbf{w}\) (it is often taken to be a neural network). The update rule is then

\[\begin{equation} \tag{16.17} \boldsymbol{\theta} \leftarrow \boldsymbol{\theta} + \eta \left(R_{t+1}+\gamma v(S_{t+1},\textbf{w})-v(S_t,\textbf{w}) \right)\frac{\nabla \pi_{\boldsymbol{\theta}}}{\pi_{\boldsymbol{\theta}}}, \end{equation}\] but the trick is that the vector \(\textbf{w}\) must also be updated. The actor is the policy side which is what drives decision making. The critic side is the value function that evaluates the actor’s performance. As learning progresses (each time both sets of parameters are updated), both sides improve. The exact algorithmic formulation is a bit long and we refer to Section 13.5 in Sutton and Barto (2018) for the precise sequence of steps of AC.

Another interesting application of parametric policies is outlined in Aboussalah and Lee (2020). In their article, the authors define a trading policy that is based on a recurrent neural network. Thus, the parameter \(\boldsymbol{\theta}\) in this case encompasses all weights and biases in the network.

Another favorable feature of parametric policies is that they are compatible with continuous sets of actions. Beyond the form (16.14), there are other ways to shape \(\pi_{\boldsymbol{\theta}}\). If \(\mathcal{A}\) is a subset of \(\mathbb{R}\), and \(f_{\boldsymbol{\Omega}}\) is a density function with parameters \(\boldsymbol{\Omega}\), then a candidate form for \(\pi_{\boldsymbol{\theta}}\) is

\[\begin{equation} \tag{16.18} \pi_{\boldsymbol{\theta}} = f_{\boldsymbol{\Omega}(s,\boldsymbol{\theta})}(a), \end{equation}\] in which the parameters \(\boldsymbol{\Omega}\) are in turn functions of the states and of the underlying (second order) parameters \(\boldsymbol{\theta}\).

While the Gaussian distribution (see section 13.7 in Sutton and Barto (2018)) is often a preferred choice, they would require some processing to lie inside the unit interval. One easy way to obtain such values is to apply the normal cumulative distribution function to the output. In H. Wang and Zhou (2019), the multivariate Gaussian policy is theoretically explored, but it assumes no constraint on weights.

Some natural parametric distributions emerge as alternatives. If only one asset is traded, then the Bernoulli distribution can be used to determine whether or not to buy the asset. If a riskless asset is available, the beta distribution offers more flexibility because the values for the proportion invested in the risky asset span the whole interval; the remainder can be invested into the safe asset. When many assets are traded, things become more complicated because of the budget constraint. One ideal candidate is the Dirichlet distribution because it is defined on a simplex (see Equation (16.13)): \[f_{\boldsymbol{\alpha}}(w_1,\dots,w_n)=\frac{1}{B(\boldsymbol{\alpha})}\prod_{n=1}^Nw_n^{\alpha_n-1},\] where \(B(\boldsymbol{\alpha})\) is the multinomial beta function: \[B(\boldsymbol{\alpha})=\frac{\prod_{n=1}^N\Gamma(\alpha_n)}{\Gamma\left(\sum_{n=1}^N\alpha_n \right)}.\]

If we set \(\pi=\pi_{\boldsymbol{\alpha}}=f_{\boldsymbol{\alpha}}\), the link with factors or characteristics can be coded through \({\boldsymbol{\alpha}}\) via a linear form: \[\begin{equation} (\textbf{F1}) \quad \alpha_{n,t}=\theta_{0,t} + \sum_{k=1}^K \theta_{t}^{(k)}x_{t,n}^{(k)}, \end{equation}\] which is highly tractable, but may violate the condition that \(\alpha_{n,t}>0\) for some values of \(\theta_{k,t}\). Indeed, during the learning process, an update in \(\boldsymbol{\theta}\) might yield values that are out of the feasible set of \(\boldsymbol{\alpha}_t\). In this case, it is possible to resort to a trick that is widely used in online learning (see, e.g., section 2.3.1 in Hoi et al. (2018)). The idea is simply to find the acceptable solution that is closest to the suggestion from the algorithm. If we call \(\boldsymbol{\theta}^*\) the result of an update rule from a given algorithm, then the closest feasible vector is \[\begin{equation} \boldsymbol{\theta}= \underset{\textbf{z} \in \Theta(\textbf{x}_t)}{\min} ||\boldsymbol{\theta}^*-\textbf{z}||^2, \end{equation}\] where \(||\cdot||\) is the Euclidean norm and \(\Theta(\textbf{x}_t)\) is the feasible set, that is, the set of vectors \(\boldsymbol{\theta}\) such that the \(\alpha_{n,t}=\theta_{0,t} + \sum_{k=1}^K \theta_{t}^{(k)}x_{t,n}^{(k)}\) are all non-negative.

A second option for the form of the policy, \(\pi^2_{\boldsymbol{\theta}_t}\), is slightly more complex but remains always valid (i.e., has positive \(\alpha_{n,t}\) values): \[\begin{equation} (\textbf{F2}) \quad \alpha_{n,t}=\exp \left(\theta_{0,t} + \sum_{k=1}^K \theta_{t}^{(k)}x_{t,n}^{(k)}\right), \end{equation}\] which is simply the exponential of the first version. With some algebra, it is possible to derive the policy gradients. The policies \(\pi^j_{\boldsymbol{\theta}_t}\) are defined by the Equations \((\textbf{Fj})\) above. Let \(\digamma\) denote the digamma function. Let \(\textbf{1}\) denote the \(\mathbb{R}^N\) vector of all ones. We have \[\begin{align*} \frac{\nabla_{\boldsymbol{\theta}_t} \pi^1_{\boldsymbol{\theta}_t}}{\pi^1_{\boldsymbol{\theta}_t}}&= \sum_{n=1}^N \left( \digamma \left( \textbf{1}'\textbf{X}_t\boldsymbol{\theta}_t \right) - \digamma(\textbf{x}_{t,n}\boldsymbol{\theta}_t) + \ln w_n \right) \textbf{x}_{t,n}' \\ \frac{\nabla_{\boldsymbol{\theta}_t} \pi^2_{\boldsymbol{\theta}_t}}{\pi^2_{\boldsymbol{\theta}_t}}&= \sum_{n=1}^N \left( \digamma \left( \textbf{1}'e^{\textbf{X}_{t}\boldsymbol{\theta}_t} \right) - \digamma(e^{\textbf{x}_{t,n}\boldsymbol{\theta}_t}) + \ln w_n \right) e^{\textbf{x}_{t,n}\boldsymbol{\theta}_t} \textbf{x}_{t,n}' \end{align*}\] where \(e^{\textbf{X}}\) is the element-wise exponential of a matrix \(\textbf{X}\).

The allocation can then either be made by direct sampling, or using the mean of the distribution \((\textbf{1}'\boldsymbol{\alpha})^{-1}\boldsymbol{\alpha}\). Lastly, a technical note: Dirichlet distributions can only be used for small portfolios because the scaling constant in the density becomes numerically intractable for large values of \(N\) (e.g., above 50). More details on this idea are laid out in André and Coqueret (2020).

16.4 Simple examples

16.4.1 Q-learning with simulations

To illustrate the gist of the problems mentioned above, we propose two implementations of \(Q\)-learning. For simplicity, the first one is based on simulations. This helps understand the learning process in a simplified framework. We consider two assets: one risky and one riskless, with return equal to zero. The returns for the risky process follow an autoregressive model of order one (AR(1)): \(r_{t+1}=a+\rho r_t+\epsilon_{t+1}\) with \(|\rho|<1\) and \(\epsilon\) following a standard white noise with variance \(\sigma^2\). In practice, individual (monthly) returns are seldom autocorrelated, but adjusting the autocorrelation helps understand if the algorithm learns correctly (see exercise below).

The environment consists only in observing the past return \(r_t\). Since we seek to estimate the \(Q\) function, we need to discretize this state variable. The simplest choice is to resort to a binary variable: equal to -1 (negative) if \(r_t<0\) and to +1 (positive) if \(r_t\ge 0\). The actions are summarized by the quantity invested in the risky asset. It can take 5 values: 0 (risk-free portfolio), 0.25, 0.5, 0.75 and 1 (fully invested in the risky asset). This is for instance the same choice as in Pendharkar and Cusatis (2018).

The landscape of R libraries for RL is surprisingly sparse. We resort to the package ReinforcementLearning which has an intuitive implementation of \(Q\)-learning (another option would be the reinforcelearn package). It requires a dataset with the usual inputs: state, action, reward and subsequent state. We start by simulating the returns: they drive the states and the rewards (portfolio returns). The actions are sampled randomly. Technically, the main function of the package requires that states and actions be of character type. The data is built in the chunk below.

library(ReinforcementLearning) # Package for RL

set.seed(42) # Fixing the random seed

n_sample <- 10^5 # Number of samples to be generated

rho <- 0.8 # Autoregressive parameter

sd <- 0.4 # Std. dev. of noise

a <- 0.06 * rho # Scaled mean of returns

data_RL <- tibble(returns = a/rho + arima.sim(n = n_sample, # Returns via AR(1) simulation

list(ar = rho),

sd = sd),

action = round(runif(n_sample)*4)/4) %>% # Random action (portfolio)

mutate(new_state = if_else(returns < 0, "neg", "pos"), # Coding of state

reward = returns * action, # Reward = portfolio return

state = lag(new_state), # Next state

action = as.character(action)) %>%

na.omit() # Remove one missing state

data_RL %>% head() # Show first lines## # A tibble: 6 × 5

## returns action new_state reward state

## <dbl> <chr> <chr> <dbl> <chr>

## 1 -0.474 0.5 neg -0.237 neg

## 2 -0.185 0.25 neg -0.0463 neg

## 3 0.146 0.25 pos 0.0364 neg

## 4 0.543 0.75 pos 0.407 pos

## 5 0.202 0.75 pos 0.152 pos

## 6 0.376 0.25 pos 0.0940 posThere are 3 parameters in the implementation of the Q-learning algorithm:

-

\(\eta\), which is the learning rate in the updating Equation (16.8). In ReinforcementLearning, this is coded as alpha;

-

\(\gamma\), the discounting rate for the rewards (also shown in Equation (16.8));

- and \(\epsilon\), which controls the rate of exploration versus exploitation (see Equation (16.10)).

control <- list(alpha = 0.1, # Learning rate

gamma = 0.7, # Discount factor for rewards

epsilon = 0.1) # Exploration rate

fit_RL <- ReinforcementLearning(data_RL, # Main RL function

s = "state",

a = "action",

r = "reward",

s_new = "new_state",

control = control)

print(fit_RL) # Show the output## State-Action function Q

## 0.25 0 1 0.75 0.5

## neg 0.2473169 0.4216894 0.1509653 0.1734538 0.229004

## pos 1.0721669 0.7561417 1.4739050 1.1214795 1.045047

##

## Policy

## neg pos

## "0" "1"

##

## Reward (last iteration)

## [1] 2588.659The output shows the Q function, which depends naturally both on states and actions. When the state is negative, large risky positions (action equal to 0.75 or 1.00) are associated with the smallest average rewards, whereas small positions yield the highest average rewards. When the state is positive, the average rewards are the highest for the largest allocations. The rewards in both cases are almost a monotonic function of the proportion invested in the risky asset. Thus, the recommendation of the algorithm (i.e., the policy) is to be fully invested in a positive state and to refrain from investing in a negative state. Given the positive autocorrelation of the underlying process, this does make sense.

Basically, the algorithm has simply learned that positive (resp. negative) returns are more likely to follow positive (resp. negative) returns. While this is somewhat reassuring, it is by no means impressive, and much simpler tools would yield similar conclusions and guidance.

16.4.2 Q-learning with market data

The second application is based on the financial dataset. To reduce the dimensionality of the problem, we will assume that:

- only one feature (price-to-book ratio) captures the state of the environment. This feature is processed so that is has only a limited number of possible values;

- actions take values over a discrete set consisting of three positions: +1 (buy the market), -1 (sell the market) and 0 (hold no risky positions);

- only two assets are traded: those with stock_id equal to 3 and 4 - they both have 245 days of trading data.

The construction of the dataset is unelegantly coded below.

return_3 <- data_ml %>% filter(stock_id == 3) %>% pull(R1M_Usd) # Return of asset 3

return_4 <- data_ml %>% filter(stock_id == 4) %>% pull(R1M_Usd) # Return of asset 4

pb_3 <- data_ml %>% filter(stock_id == 3) %>% pull(Pb) # P/B ratio of asset 3

pb_4 <- data_ml %>% filter(stock_id == 4) %>% pull(Pb) # P/B ratio of asset 4

action_3 <- floor(runif(length(pb_3))*3) - 1 # Action for asset 3 (random)

action_4 <- floor(runif(length(pb_4))*3) - 1 # Action for asset 4 (random)

RL_data <- tibble(return_3, return_4, # Building the dataset

pb_3, pb_4,

action_3, action_4) %>%

mutate(action = paste(action_3, action_4), # Uniting actions

pb_3 = round(5 * pb_3), # Simplifying states (P/B)

pb_4 = round(5 * pb_4), # Simplifying states (P/B)

state = paste(pb_3, pb_4), # Uniting states

reward = action_3*return_3 + action_4*return_4, # Computing rewards

new_state = lead(state)) %>% # Infer new state

dplyr::select(-pb_3, -pb_4, -action_3, # Remove superfluous vars.

-action_4, -return_3, -return_4)

head(RL_data) # Showing the result## # A tibble: 6 × 4

## action state reward new_state

## <chr> <chr> <dbl> <chr>

## 1 -1 -1 1 1 -0.061 1 1

## 2 0 1 1 1 0 1 1

## 3 -1 0 1 1 -0.018 1 1

## 4 0 -1 1 1 0.011 1 1

## 5 -1 1 1 1 -0.036 1 1

## 6 -1 -1 1 1 -0.056 1 1Actions and states have to be merged to yield all possible combinations. To simplify the states, we round 5 times the price-to-book ratios.

We keep the same hyperparameters as in the previous example. Columns below stand for actions: the first (\(resp.\) second) number notes the position in the first (\(resp.\) second) asset. The rows correspond to states. The scaled P/B ratios are separated by a point (e.g., “X2.3” means that the first (\(resp.\) second) asset has a scaled P/B of 2 (\(resp.\) 3).

fit_RL2 <- ReinforcementLearning(RL_data, # Main RL function

s = "state",

a = "action",

r = "reward",

s_new = "new_state",

control = control)

fit_RL2$Q <- round(fit_RL2$Q, 3) # Round the Q-matrix

print(fit_RL2) # Show the output ## State-Action function Q

## 0 0 0 1 0 -1 -1 -1 -1 0 -1 1 1 -1 1 0 1 1

## 0 2 0.000 0.000 0.000 -0.017 0.000 0.000 0.000 0.002 0.000

## 0 3 0.000 0.000 0.003 0.000 0.000 0.000 0.030 0.000 0.000

## 3 1 0.002 0.000 0.005 0.000 -0.002 0.000 0.000 0.000 0.000

## 2 1 0.005 0.018 0.009 -0.028 0.010 -0.003 0.021 0.008 -0.004

## 2 2 0.000 0.010 0.000 0.014 0.000 0.000 -0.013 0.006 0.000

## 2 3 0.000 0.000 0.000 0.000 0.000 0.020 0.000 -0.034 0.000

## 1 1 0.002 -0.005 -0.022 -0.011 -0.002 -0.009 -0.020 -0.014 -0.023

## 1 2 0.006 0.016 0.006 0.028 -0.001 0.001 0.020 0.020 -0.001

## 1 3 0.001 0.004 0.004 -0.011 0.000 0.003 0.005 0.003 0.010

##

## Policy

## 0 2 0 3 3 1 2 1 2 2 2 3 1 1 1 2 1 3

## "1 0" "1 -1" "0 -1" "1 -1" "-1 -1" "-1 1" "0 0" "-1 -1" "1 1"

##

## Reward (last iteration)

## [1] -1.296The output shows that there are many combinations of states and actions that are not spanned by the data: basically, the \(Q\) function has a zero and it is likely that the combination has not been explored. Some states seem to be more often represented (“X1.1”, “X1.2” and “X2.1”), others, less (“X3.1” and “X3.2”). It is hard to make any sense of the recommendations. Some states close “X0.1” and “X1.1” but the outcomes related to them are very different (buy and short versus hold and buy). Moreover, there is no coherence and no monotonicity in actions with respect to individual state values: low values of states can be associated to very different actions.

One reason why these conclusions do not appear trustworthy pertains to the data size. With only 200+ time points and 99 state-action pairs (11 times 9), this yields on average only two data points to compute the \(Q\) function. This could be improved by testing more random actions, but the limits of the sample size would eventually (rapidly) be reached anyway. This is left as an exercise (see below).

16.5 Concluding remarks

Reinforcement learning has been applied to financial problems for a long time. Early contributions in the late 1990s include Neuneier (1996), Moody and Wu (1997), Moody et al. (1998) and Neuneier (1998). Since then, many researchers in the computer science field have sought to apply RL techniques to portfolio problems. The advent of massive datasets and the increase in dimensionality make it hard for RL tools to adapt well to very rich environments that are encountered in factor investing.

Recently, some approaches seek to adapt RL to continuous action spaces (H. Wang and Zhou (2019), Aboussalah and Lee (2020)) but not to high-dimensional state spaces. These spaces are those required in factor investing because all firms yield hundreds of data points characterizing their economic situation. In addition, applications of RL in financial frameworks have a particularity compared to many typical RL tasks: in financial markets, actions of agents have no impact on the environment (unless the agent is able to perform massive trades, which is rare and ill-advised because it pushes prices in the wrong direction). This lack of impact of actions may possibly mitigate the efficiency of traditional RL approaches.

Those are challenges that will need to be solved in order for RL to become competitive with alternative (supervised) methods. Nevertheless, the progressive (online-like) way RL works seems suitable for non-stationary environments: the algorithm slowly shifts paradigms as new data arrives. In stationary environments, it has been shown that RL manages to converge to optimal solutions (Kong et al. (2019), Chaouki et al. (2020)). Therefore, in non-stationary markets, RL could be a recourse to build dynamic predictions that adapt to changing macroeconomic conditions. More research needs to be carried out in this field on large dimensional datasets.

We end this chapter by underlining that reinforcement learning has also been used to estimate complex theoretical models (Halperin and Feldshteyn (2018), Garcı́a-Galicia, Carsteanu, and Clempner (2019)). The research in the field is incredibly diversified and is orientated towards many directions. It is likely that captivating work will be published in the near future.

16.6 Exercises

Test what happens if the process for generating returns has a negative autocorrelation. What is the impact on the \(Q\) function and the policy?

Keeping the same 2 assets as in Section 16.4.2, increases the size of RL_data by testing all possible action combinations for each original data point. Re-run the \(Q\)-learning function and see what happens.