Chapter 2 Introduction

Conclusions often echo introductions. This chapter was completed at the very end of the writing of the book. It outlines principles and ideas that are probably more relevant than the sum of technical details covered subsequently. When stuck with disappointing results, we advise the reader to take a step away from the algorithm and come back to this section to get a broader perspective of some of the issues in predictive modelling.

2.1 Context

The blossoming of machine learning in factor investing has its source at the confluence of three favorable developments: data availability, computational capacity, and economic groundings.

First, the data. Nowadays, classical providers such as Bloomberg and Reuters have seen their playing field invaded by niche players and aggregation platforms (see note 4). In addition, high-frequency data and derivative quotes have become mainstream. Hence, firm-specific attributes are easy and often cheap to compile. This means that the size of $\mathbf{X}$ in Equation (2.1) is now sufficiently large to be plugged into ML algorithms. The order of magnitude (in 2019) that can be reached is the following: a few hundred monthly observations over several thousand stocks (US listed at least) covering a few hundred attributes. This makes a dataset of tens of millions of points. While this is a reasonably high figure, we highlight that the chronological depth is probably the weak point and will remain so for decades to come because accounting figures are only released on a quarterly basis. Needless to say, this drawback does not hold for high-frequency strategies.
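The order of magnitude quoted above can be checked with a short back-of-the-envelope computation. The panel dimensions below are illustrative assumptions and do not come from any specific dataset. In particular, the average number of stocks per month is set below the total number of listed stocks because panels are unbalanced: not every firm is present at every date.

```python
# Illustrative panel dimensions (assumptions, not figures from a specific dataset).
n_months = 240                # 20 years of monthly observations
avg_stocks_per_month = 1_500  # average cross-section (unbalanced panel)
n_features = 200              # firm-specific attributes

n_rows = n_months * avg_stocks_per_month  # stock-month observations
n_points = n_rows * n_features            # individual data points

# Memory footprint if every point is stored as a 64-bit float (8 bytes).
size_gb = n_points * 8 / 1e9

print(f"{n_rows:,} rows, {n_points:,} points, about {size_gb:.2f} GB")
```

Under these assumptions the panel holds 72 million points and fits comfortably in the memory of a standard laptop. The number of distinct dates (240 here) is the binding constraint, not the raw size.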

Second, computational power, both through hardware and software. Storage and processing speed are no longer technical hurdles, and models can even be run on the cloud thanks to services hosted by major actors (Amazon, Microsoft, IBM and Google) and by smaller players (Rackspace, Techila). On the software side, open source has become the norm, funded by corporations (TensorFlow & Keras by Google, PyTorch by Facebook, H2O, etc.), universities (Scikit-Learn by INRIA, CoreNLP by Stanford, NLTK by UPenn) and small groups of researchers (caret, xgboost, tidymodels, to name but a few frameworks). Consequently, ML is no longer the private turf of a handful of expert computer scientists, but is on the contrary accessible to anyone willing to learn and code.

Finally, economic framing. Machine learning applications in finance were initially introduced by computer scientists and information systems experts (e.g., Braun and Chandler (1987), White (1988)) and exploited shortly after by academics in financial economics (Bansal and Viswanathan (1993)) and by hedge funds (see, e.g., Zuckerman (2019)). Nonlinear relationships then became more mainstream in asset pricing (Freeman and Tse (1992), Bansal, Hsieh, and Viswanathan (1993)). These contributions started to pave the way for the more brute-force approaches that have blossomed since the 2010s and which are mentioned throughout the book.

In the concise backtesting protocol of Arnott, Harvey, and Markowitz (2019), the first piece of advice is to rely on a model that makes sense economically. We agree with this stance, and the only assumption that we make in this book is that future returns depend on firm characteristics. The relationship between these features and performance is largely unknown and probably time-varying. This is why ML can be useful: to detect some hidden patterns beyond the documented asset pricing anomalies. Moreover, dynamic training allows models to adapt to changing market conditions.

2.2 Portfolio construction: the workflow

Building successful portfolio strategies requires many steps. This book covers many of them but focuses predominantly on the prediction part. Indeed, allocating to assets most of the time requires making bets, and thus foreseeing which assets will do well and which will not. In this book, we mostly resort to supervised learning to forecast returns in the cross-section. The baseline equation in supervised learning,

$$\mathbf{y} = f(\mathbf{X}) + \boldsymbol{\epsilon}, \tag{2.1}$$

is translated in financial terms as

$$r_{t+1,n} = f(\mathbf{x}_{t,n}) + \epsilon_{t+1,n}, \tag{2.2}$$

where $f(\mathbf{x}_{t,n})$ can be viewed as the expected return for time $t+1$ computed at time $t$, that is, $\mathbb{E}_t[r_{t+1,n}]$. Note that the model is common to all assets ($f$ is not indexed by $n$); it is thus similar to panel approaches.
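To make the idea of a model common to all assets concrete, here is a minimal sketch on simulated data. Everything in it is an assumption made for illustration: the panel size, the single feature, the linear form of $f$, the value `TRUE_SLOPE` and the noise level. One function is fitted on the pooled observations of all assets, with no asset-specific parameter.

```python
import random

random.seed(0)

N_ASSETS, N_DATES = 50, 120  # hypothetical panel size
TRUE_SLOPE = 0.02            # assumed link between the feature and the next return
NOISE_STD = 0.10             # assumed noise level, large relative to the signal

# Simulate pairs (x_{t,n}, r_{t+1,n}) with r_{t+1,n} = TRUE_SLOPE * x_{t,n} + noise.
xs, rs = [], []
for n in range(N_ASSETS):
    for t in range(N_DATES):
        x = random.gauss(0.0, 1.0)
        xs.append(x)
        rs.append(TRUE_SLOPE * x + random.gauss(0.0, NOISE_STD))


def fit_pooled_ols(x, y):
    """Ordinary least squares with one feature, pooled over all assets and dates."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    slope = s_xy / s_xx
    return mean_y - slope * mean_x, slope


intercept, slope = fit_pooled_ols(xs, rs)


def f(x):
    """Fitted model: the same function is applied to every asset."""
    return intercept + slope * x


# In-sample R^2: share of return variance captured by the fitted model.
mean_r = sum(rs) / len(rs)
sse = sum((r - f(x)) ** 2 for x, r in zip(xs, rs))
sst = sum((r - mean_r) ** 2 for r in rs)
r_squared = 1.0 - sse / sst

print(f"slope = {slope:.4f}, R^2 = {r_squared:.4f}")
```

The $R^2$ is low even though the data were generated by the fitted model, which reflects the assumed noise level. Any supervised learner could replace the linear fit; the key design choice is that observations from all assets are stacked before estimation.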

Building accurate predictions requires paying attention to all terms in the above equation. Chronologically, the first step is to gather data and to process it (see Chapter 4). To the best of our knowledge, the only consensus is that, on the $\mathbf{x}$ side, the features should include classical predictors reported in the literature: market capitalization, accounting ratios, risk measures, and momentum proxies (see Chapter 3). For the dependent variable, many researchers and practitioners work with monthly returns, but other horizons may perform better out-of-sample.

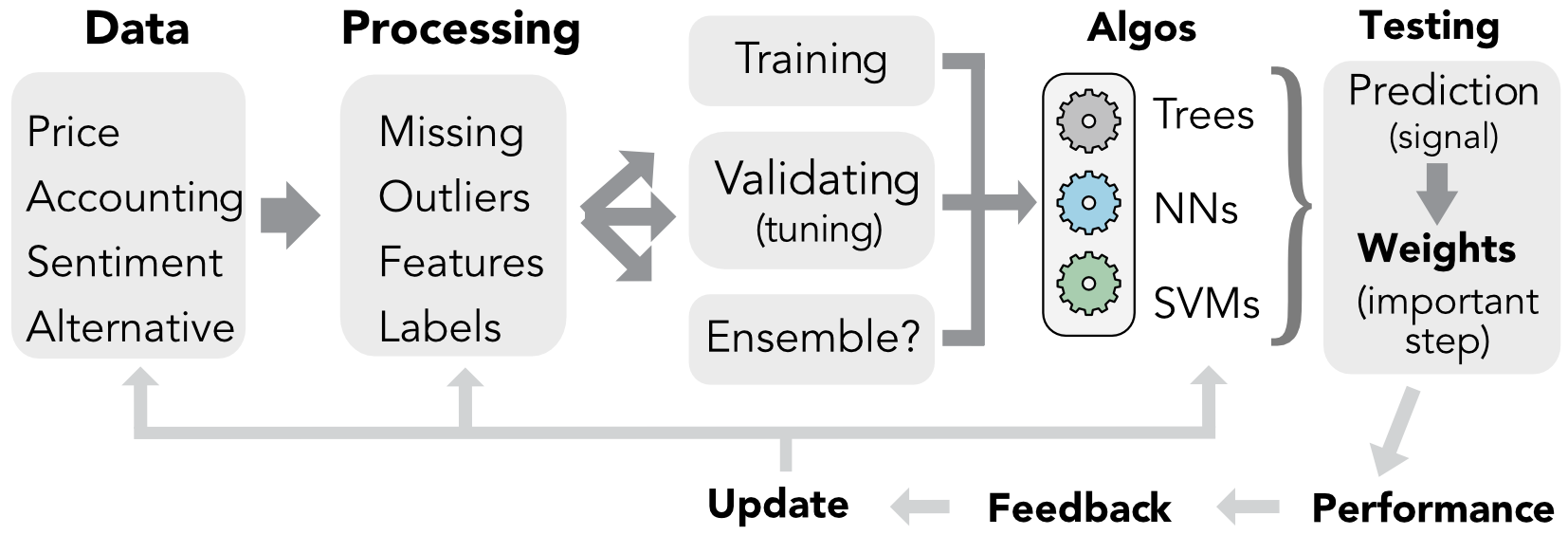

FIGURE 2.1: Simplified workflow in ML-based portfolio construction.


2.3 Machine Learning is no magic wand

By definition, the curse of predictions is that they rely on past data to infer patterns about subsequent fluctuations. The more or less explicit hope of any forecaster is that the past will turn out to be a good approximation of the future. Needless to say, this is a pious wish; in general, predictions fare badly. Surprisingly, this does not depend much on the sophistication of the econometric tool. In fact, heuristic guesses are often hard to beat.

To illustrate this sad truth, the baseline algorithms that we detail in Chapters 5 to 7 yield at best mediocre results. This is done on purpose. This forces the reader to understand that blindly feeding data and parameters to a coded function will seldom suffice to reach satisfactory out-of-sample accuracy.

In machine learning, models are estimated on one portion of data (training set) and then tested on another portion of the data (testing set) to assess their quality. We split our sample accordingly.
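With time-indexed financial data, a natural way to form the two portions is a chronological cut, so that the model is never tested on dates that precede its training data. The sketch below is a minimal illustration. The tuple layout `(date, features, label)`, the ISO date strings and the split date are assumptions made for the example.

```python
def chronological_split(samples, split_date):
    """Split samples into a training set (before split_date) and a testing set (from split_date).

    Each sample is a tuple (date, features, label). Dates must be comparable,
    e.g. ISO-formatted strings such as "2019-01-31", which sort chronologically.
    """
    train = [s for s in samples if s[0] < split_date]
    test = [s for s in samples if s[0] >= split_date]
    return train, test


# Toy panel: two stocks observed at four month-ends (hypothetical values).
samples = [
    ("2018-11-30", [0.2], 0.01), ("2018-11-30", [-0.5], -0.03),
    ("2018-12-31", [0.1], 0.02), ("2018-12-31", [-0.4], 0.00),
    ("2019-01-31", [0.3], -0.01), ("2019-01-31", [-0.6], 0.04),
    ("2019-02-28", [0.0], 0.02), ("2019-02-28", [-0.2], -0.02),
]

train, test = chronological_split(samples, split_date="2019-01-01")
```

A random split would mix past and future observations and tends to flatter out-of-sample accuracy. When labels are forward returns, the last training labels are realized after the split date, so leaving a gap between the two sets may be prudent.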

Below, we sum up some key points that we have learned through our exploratory journey in financial ML.

- The first point is that causality is key. If one is able to identify $X \rightarrow y$, where $y$ are expected returns, then the problem is solved. Unfortunately, causality is incredibly hard to uncover. Thus, most of the time, researchers have to make do with simple correlation patterns, which are far less informative and robust.

- Relatedly, financial datasets are extremely noisy. It is a daunting task to extract signals out of them. No-arbitrage reasoning implies that if a simple pattern yielded durable profits, it would mechanically and rapidly vanish.

- The no-free-lunch theorem of Wolpert (1992a) requires the analyst to formulate views on the model. This is why economic or econometric framing is key. The assumptions and choices that are made regarding both the dependent variables and the explanatory features are decisive. As a corollary, data is key. The inputs given to the models are probably much more important than the choice of the model itself.

- To maximize out-of-sample efficiency, the right question is probably, to paraphrase Jeff Bezos: what’s not going to change? Persistent series are more likely to unveil enduring patterns.

- Everybody makes mistakes. Errors in loops or variable indexing are part of the journey. What matters is to learn from those lapses.

To conclude, we remind the reader of this obvious truth: nothing will ever replace practice. Gathering and cleaning data, coding backtests, tuning ML models, testing weighting schemes, debugging, starting all over again: these are all absolutely indispensable steps and tasks that must be repeated indefinitely. There is no substitute for experience.

References

Arnott, Rob, Campbell R Harvey, and Harry Markowitz. 2019. “A Backtesting Protocol in the Era of Machine Learning.” Journal of Financial Data Science 1 (1): 64–74.

Bansal, Ravi, David A Hsieh, and S Viswanathan. 1993. “A New Approach to International Arbitrage Pricing.” Journal of Finance 48 (5): 1719–47.

Bansal, Ravi, and Salim Viswanathan. 1993. “No Arbitrage and Arbitrage Pricing: A New Approach.” Journal of Finance 48 (4): 1231–62.

Braun, Helmut, and John S Chandler. 1987. “Predicting Stock Market Behavior Through Rule Induction: An Application of the Learning-from-Example Approach.” Decision Sciences 18 (3): 415–29.

Freeman, Robert N, and Senyo Y Tse. 1992. “A Nonlinear Model of Security Price Responses to Unexpected Earnings.” Journal of Accounting Research, 185–209.

White, Halbert. 1988. “Economic Prediction Using Neural Networks: The Case of IBM Daily Stock Returns.”

Wolpert, David H. 1992a. “On the Connection Between in-Sample Testing and Generalization Error.” Complex Systems 6 (1): 47.

Zuckerman, Gregory. 2019. The Man Who Solved the Market: How Jim Simons Launched the Quant Revolution. Penguin Random House.

4. We refer to for a list of alternative data providers. Moreover, we recall that Quandl, an alt-data hub, was acquired by Nasdaq in December 2018. As large players acquire newcomers, the field may consolidate.

- Other approaches are nonetheless possible, as is advocated in Prado and Fabozzi