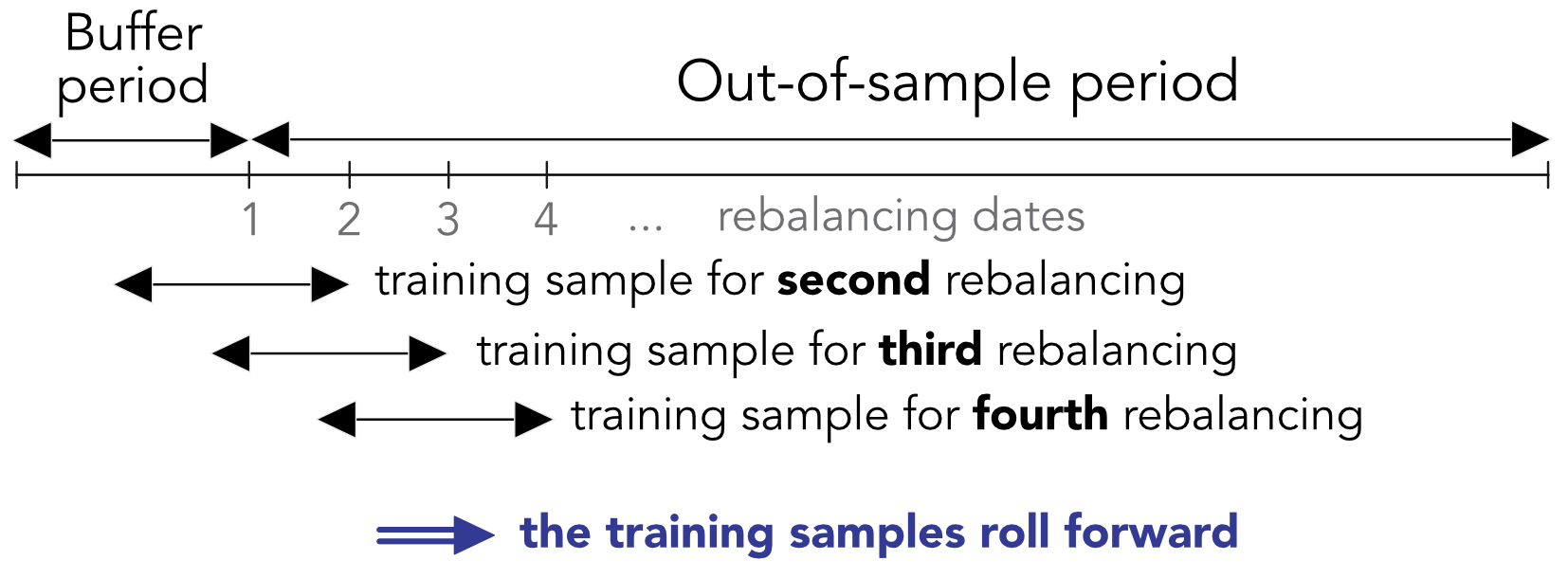

FIGURE 12.1: Backtesting with rolling windows. The training set of the first period is simply the buffer period.

In this section, we introduce the notations and framework that will be used when analyzing and comparing investment strategies. Portfolio backtesting is often conceived and perceived as a quest to find the best strategy - or at least a solidly profitable one. When carried out thoroughly, this possibly long endeavor may entice the layman to confuse a fluke for a robust policy. Two papers published back-to-back warn against the perils of data snooping, which is related to $p$-hacking. In both cases, the researcher will torture the data until the sought result is found.

Fabozzi and Prado (2018) acknowledge that only strategies that work make it to the public, while thousands (at least) have been tested. Picking the pleasing outlier (the only strategy that seemed to work) is likely to generate disappointment when switching to real trading. In a similar vein, R. Arnott, Harvey, and Markowitz (2019) provide a list of principles and safeguards that any analyst should follow to avoid any type of error when backtesting strategies. The worst type is arguably false positives whereby strategies are found (often by cherrypicking) to outperform in one very particular setting, but will likely fail in live implementation.

In addition to these recommendations on portfolio construction, R. Arnott et al. (2019) also warn against the hazards of blindly investing in smart beta products related to academic factors. Plainly, expectations should not be set too high, lest investors be disappointed. Another takeaway from their article is that economic cycles have a strong impact on factor returns: correlations change quickly and drawdowns can be magnified in times of major downturns.

Backtesting is more complicated than it seems and it is easy to make small mistakes that lead to apparently good portfolio policies. This chapter lays out a rigorous approach to this exercise, discusses a few caveats, and proposes a lengthy example.

We consider a dataset with three dimensions: time $t=1,\dots,T$, assets $n=1,\dots,N$ and characteristics $k=1,\dots,K$. One of these attributes must be the price of asset $n$ at time $t$, which we will denote $p_{t,n}$. From that, the computation of the arithmetic return is straightforward ($r_{t,n}=p_{t,n}/p_{t-1,n}-1$) and so is any heuristic measure of profitability. For simplicity, we assume that time points are equidistant or uniform, i.e., that $t$ is the index of a trading day or of a month for example. If each point in time $t$ has data available for all assets, then this makes a dataset with $I=T\times N$ rows.
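The return formula above can be illustrated with a short sketch. The price panel below is made up for the example (dates in rows, assets in columns), and the name returns_demo is purely illustrative.

```python
import pandas as pd

# Hypothetical price panel: rows are dates t, columns are assets n.
prices = pd.DataFrame(
    {"A": [100.0, 110.0, 99.0], "B": [50.0, 50.0, 55.0]},
    index=pd.to_datetime(["2020-01-31", "2020-02-29", "2020-03-31"]),
)

# r_{t,n} = p_{t,n} / p_{t-1,n} - 1; the first date has no past price and is dropped.
returns_demo = (prices / prices.shift(1) - 1).dropna()
```

With $T$ price dates, this leaves $T-1$ return dates per asset.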

The dataset is first split in two: the out-of-sample period and the initial buffer period. The buffer period is required to train the models for the first portfolio composition. This period is determined by the size of the training sample. There are two options for this size: fixed (usually equal to 2 to 10 years) and expanding. In the first case, the training sample will roll over time, taking into account only the most recent data. In the second case, models are built on all of the available data, the size of which increases with time. This last option can create problems because the first dates of the backtest are based on much smaller amounts of information compared to the last dates. Moreover, there is an ongoing debate on whether including the full history of returns and characteristics is advantageous or not. Proponents argue that this allows models to see many different market conditions. Opponents make the case that old data is by definition outdated and thus useless and possibly misleading because it won’t reflect current or future short-term fluctuations.

Henceforth, we choose the rolling period option for the training sample, as depicted in Figure 12.1.

Two crucial design choices are the rebalancing frequency and the horizon at which the label is computed. It is not obvious that they should be equal but their choice should make sense. It can seem right to train on a 12-month forward label (which captures longer trends) and invest monthly or quarterly. However, it seems odd to do the opposite and train on short-term movements (monthly) and invest at a long horizon.

These choices have a direct impact on how the backtest is carried out. If we note:

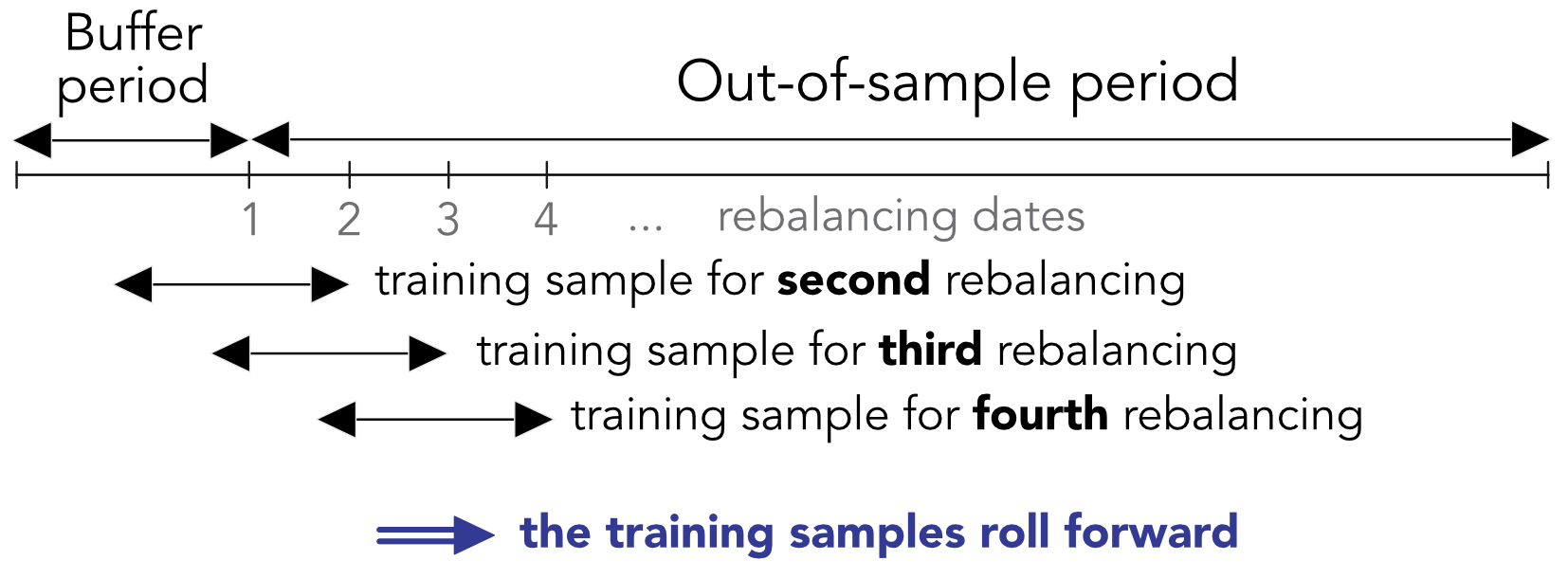

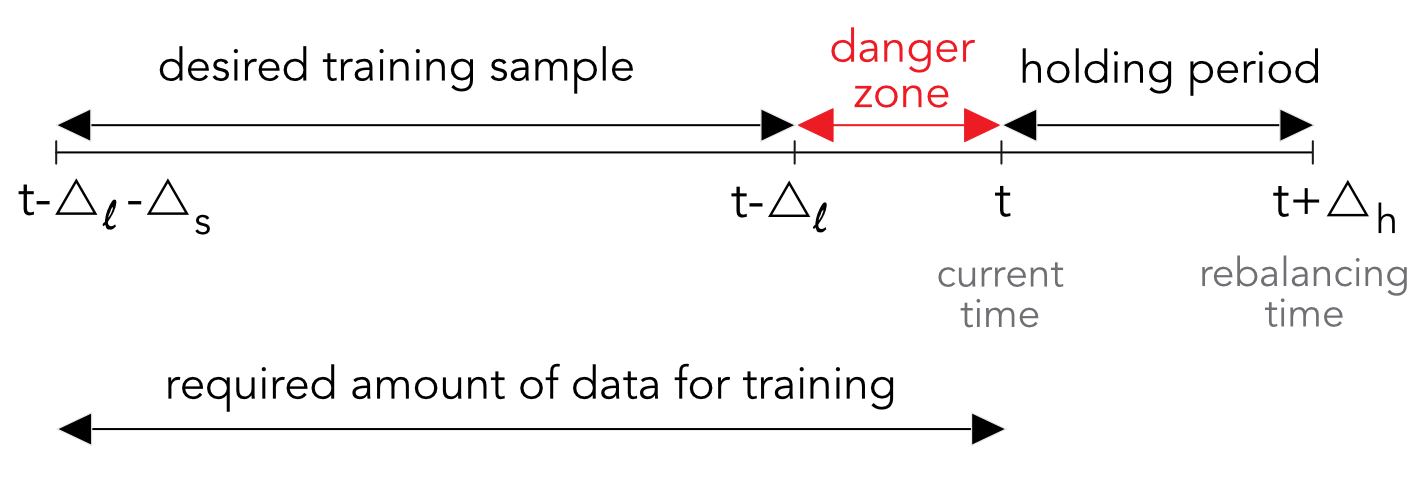

then the total length of the training sample should be $\Delta_s+\Delta_l$. Indeed, at any moment $t$, the training sample should stop at $t-\Delta_l$ so that the last point corresponds to a label that is calculated until time $t$. This is highlighted in Figure 12.2 in the form of the red danger zone. We call it the red zone because any observation which has a time index $s$ inside the interval $(t-\Delta_l,t]$ will engender a forward looking bias. Indeed if a feature is indexed by $s \in (t-\Delta_l,t]$, then by definition, the label covers the period $[s,s+\Delta_l]$ with $s+\Delta_l>t$. At time $t$, this requires knowledge of the future and is naturally not realistic.
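The date arithmetic above can be sketched as follows. This is a minimal illustration, assuming integer time indices, reading $\Delta_s$ as the number of feature dates kept in the training sample and $\Delta_l$ as the label horizon; the function name training_dates is hypothetical.

```python
def training_dates(t, delta_s, delta_l):
    """Return the feature dates that can be used for training at time t.

    Features must stop at t - delta_l so that every label, which covers
    [s, s + delta_l], is fully observed by time t (no red-zone dates).
    """
    last = t - delta_l              # last admissible feature date
    first = last - delta_s + 1      # keep delta_s feature dates
    if first < 0:
        raise ValueError("buffer period too short for this training window")
    return list(range(first, last + 1))

# Example: monthly data, 60 training dates, 12-month label
dates = training_dates(t=100, delta_s=60, delta_l=12)
```

From the first feature date to time $t$, the data span is $\Delta_s+\Delta_l$ periods, which is the total length mentioned above.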

FIGURE 12.2: The subtleties in rolling training samples.

The predictive tools outlined in Chapters 5 to 11 are only meant to provide a signal that is expected to give some information on the future profitability of assets. There are many ways that this signal can be integrated in an investment decision (see Snow (2020) for ways to integrate ML tools into this task).

First and foremost, there are at least two steps in the portfolio construction process and the signal can be used at any of these stages. Relying on the signal for both steps puts a lot of emphasis on the predictions and should only be considered when the level of confidence in the forecasts is high.

The first step is selection. While a forecasting exercise can be carried out on a large number of assets, it is not compulsory to invest in all of these assets. In fact, for long-only portfolios, it would make sense to take advantage of the signal to exclude those assets that are presumably likely to underperform in the future. Often, portfolio policies have fixed sizes that impose a constant number of assets. One heuristic way to exploit the signal is to select the assets that have the most favorable predictions and to discard the others. This naive idea is often used in the asset pricing literature: portfolios are formed according to the quantiles of underlying characteristics and some characteristics are deemed interesting if the corresponding sorted portfolios exhibit very different profitabilities (e.g., high average return for high quantiles versus low average return for low quantiles).

This is for instance an efficient way to test the relevance of the signal. If $Q$ portfolios $q=1,\dots,Q$ are formed according to the rankings of the assets with respect to the signal, then one would expect the out-of-sample performance of the portfolios to be monotonic in $q$. While a rigorous test of monotonicity would require accounting for all portfolios (see, e.g., Romano and Wolf (2013)), it is often only assumed that the extreme portfolios suffice. If the difference between portfolio number 1 and portfolio number $Q$ is substantial, then the signal is valuable. Whenever the investor is able to short assets, this amounts to a dollar neutral strategy.
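A sorted-portfolio check of this kind can be sketched as below. The data are simulated (a noisy but informative signal), the function name sorted_portfolio_returns is hypothetical, and portfolios are equally weighted within each quantile bucket, which is one choice among others.

```python
import numpy as np
import pandas as pd

def sorted_portfolio_returns(signal, realized, Q=5):
    """Average realized return of Q equally weighted portfolios sorted on the signal."""
    ranks = signal.rank(method="first")             # break ties deterministically
    bucket = pd.qcut(ranks, Q, labels=False) + 1    # portfolio index q = 1..Q
    return realized.groupby(bucket).mean()

rng = np.random.default_rng(0)
signal = pd.Series(rng.normal(size=500))                                  # toy signal
realized = 0.02 * signal + pd.Series(rng.normal(scale=0.05, size=500))    # toy returns

by_q = sorted_portfolio_returns(signal, realized, Q=5)
long_short = by_q.loc[5] - by_q.loc[1]   # long portfolio Q, short portfolio 1
```

A clearly positive long_short value and returns that increase with $q$ in by_q are what one would hope to see for a useful signal.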

The second step is weighting. If the selection process relied on the signal, then a simple weighting scheme is often a good idea. Equally weighted portfolios are known to be hard to beat (see DeMiguel, Garlappi, and Uppal (2009)), especially compared to their cap-weighted alternative, as is shown in Plyakha, Uppal, and Vilkov (2016). More advanced schemes include equal risk contributions (Maillard, Roncalli, and Teiletche (2010)) and constrained minimum variance (Coqueret (2015)). Both only rely on the covariance matrix of the assets and not on any proxy for the vector of expected returns.

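For the sake of completeness, we make explicit a generalization of Coqueret (2015), which is a generic constrained quadratic program:

$$\underset{\textbf{w}}{\min} \ \frac{\lambda}{2}\textbf{w}'\boldsymbol{\Sigma}\textbf{w}-\textbf{w}'\boldsymbol{\mu}, \quad \text{s.t.} \quad \begin{array}{l} \textbf{w}'\textbf{1}_N=1, \\ (\textbf{w}-\textbf{w}_-)'\boldsymbol{\Lambda}(\textbf{w}-\textbf{w}_-) \le \delta_R, \\ \textbf{w}'\textbf{w} \le \delta_D, \end{array} \tag{12.1}$$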

where it is easy to recognize the usual mean-variance optimization on the left-hand side. We impose three constraints on the right-hand side. The first one is the budget constraint (weights sum to one). The second one penalizes variations in weights (compared to the current allocation, $\textbf{w}_-$) via a diagonal matrix $\boldsymbol{\Lambda}$ that reflects trading costs. This is a crucial point. Portfolios are rarely constructed from scratch and are most of the time adjustments from existing positions. In order to reduce the orders and the corresponding transaction costs, it is possible to penalize large variations from the existing portfolio. In the above program, the current weights are written $\textbf{w}_-$ and the desired ones $\textbf{w}$ so that $\textbf{w}-\textbf{w}_-$ is the vector of deviations from the current positions. The term $(\textbf{w}-\textbf{w}_-)'\boldsymbol{\Lambda}(\textbf{w}-\textbf{w}_-)$ is the sum of squared deviations, weighted by the diagonal coefficients $\Lambda_{n,n}$. This can be helpful because some assets may be more costly to trade due to liquidity (large cap stocks are more liquid and their trading costs are lower). When $\delta_R$ decreases, the rotation is reduced because weights are not allowed to deviate too much from $\textbf{w}_-$. The last constraint enforces diversification via the Herfindahl-Hirschman index of the portfolio: the smaller $\delta_D$, the more diversified the portfolio.

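Recalling that there are $N$ assets in the universe, the Lagrange form of (12.1) is:

$$L(\textbf{w})=\frac{\lambda}{2}\textbf{w}'\boldsymbol{\Sigma}\textbf{w}-\textbf{w}'\boldsymbol{\mu}-\eta(\textbf{w}'\textbf{1}_N-1)+\kappa_R\left((\textbf{w}-\textbf{w}_-)'\boldsymbol{\Lambda}(\textbf{w}-\textbf{w}_-)-\delta_R\right)+\kappa_D(\textbf{w}'\textbf{w}-\delta_D), \tag{12.2}$$

and the first order condition

$$\frac{\partial}{\partial \textbf{w}}L(\textbf{w})=\lambda\boldsymbol{\Sigma}\textbf{w}-\boldsymbol{\mu}-\eta\textbf{1}_N+2\kappa_R\boldsymbol{\Lambda}(\textbf{w}-\textbf{w}_-)+2\kappa_D\textbf{w}=0$$

yields

$$\textbf{w}^*=(\lambda\boldsymbol{\Sigma}+2\kappa_R\boldsymbol{\Lambda}+2\kappa_D\textbf{I}_N)^{-1}\left(\boldsymbol{\mu}+\eta_{\lambda,\kappa_R,\kappa_D}\textbf{1}_N+2\kappa_R\boldsymbol{\Lambda}\textbf{w}_-\right), \tag{12.3}$$

with

$$\eta_{\lambda,\kappa_R,\kappa_D}=\frac{1-\textbf{1}_N'(\lambda\boldsymbol{\Sigma}+2\kappa_R\boldsymbol{\Lambda}+2\kappa_D\textbf{I}_N)^{-1}(\boldsymbol{\mu}+2\kappa_R\boldsymbol{\Lambda}\textbf{w}_-)}{\textbf{1}_N'(\lambda\boldsymbol{\Sigma}+2\kappa_R\boldsymbol{\Lambda}+2\kappa_D\textbf{I}_N)^{-1}\textbf{1}_N}.$$

This parameter ensures that the budget constraint is satisfied. The optimal weights in (12.3) depend on three tuning parameters: $\lambda$, $\kappa_R$ and $\kappa_D$.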
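To fix ideas, here is a minimal numerical sketch of such optimal weights. It assumes the solution takes the form $\textbf{w}^*=\textbf{M}^{-1}(\boldsymbol{\mu}+\eta\textbf{1}_N+2\kappa_R\boldsymbol{\Lambda}\textbf{w}_-)$ with $\textbf{M}=\lambda\boldsymbol{\Sigma}+2\kappa_R\boldsymbol{\Lambda}+2\kappa_D\textbf{I}_N$, which is what the first order condition of a Lagrangian with multipliers $\eta$, $\kappa_R$ and $\kappa_D$ delivers. The function name and all input values are illustrative assumptions.

```python
import numpy as np

def constrained_weights(mu, Sigma, Lambda, w_old, lam, kappa_R, kappa_D):
    """Closed-form weights of the penalized mean-variance program (sketch)."""
    N = len(mu)
    ones = np.ones(N)
    M = lam * Sigma + 2 * kappa_R * Lambda + 2 * kappa_D * np.eye(N)
    b = mu + 2 * kappa_R * (Lambda @ w_old)
    M_inv_b = np.linalg.solve(M, b)          # M^{-1} (mu + 2 kappa_R Lambda w_-)
    M_inv_1 = np.linalg.solve(M, ones)       # M^{-1} 1_N
    eta = (1 - ones @ M_inv_b) / (ones @ M_inv_1)   # enforces the budget constraint
    return M_inv_b + eta * M_inv_1

# Hypothetical inputs for three assets
mu = np.array([0.05, 0.07, 0.06])
Sigma = np.array([[0.04, 0.01, 0.00],
                  [0.01, 0.09, 0.02],
                  [0.00, 0.02, 0.0625]])
Lambda = np.diag([1.0, 2.0, 0.5])            # asset-specific trading cost penalties
w_old = np.full(3, 1 / 3)                    # current allocation

w = constrained_weights(mu, Sigma, Lambda, w_old, lam=3.0, kappa_R=0.1, kappa_D=0.1)
w_sticky = constrained_weights(mu, Sigma, Lambda, w_old, lam=3.0, kappa_R=1e6, kappa_D=0.1)
```

Both vectors sum to one. With a very large $\kappa_R$, the penalty on trading dominates and w_sticky remains almost identical to the current weights, which illustrates how this parameter controls rotation.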

This seemingly complex formula is in fact very flexible and tractable. It requires some tests and adjustments before finding realistic values for $\lambda$, $\kappa_R$ and $\kappa_D$ (see exercise at the end of the chapter). In Pedersen, Babu, and Levine (2020), the authors recommend a similar form, except that the covariance matrix is shrunk towards the diagonal matrix of sample variances and the expected returns are a mix between a signal and an anchor portfolio. The authors argue that their general formulation has links with robust optimization (see also Kim, Kim, and Fabozzi (2014)), Bayesian inference (Lai et al. (2011)), matrix denoising via random matrix theory, and, naturally, shrinkage. In fact, shrunk expected returns have been around for quite some time (Jorion (1985), Kan and Zhou (2007) and Bodnar, Parolya, and Schmid (2013)) and simply seek to diversify and reduce estimation risk.

The evaluation of performance is a key stage in a backtest. This section, while not exhaustive, is intended to cover the most important facets of portfolio assessment.

While the evaluation of the accuracy of ML tools (see Section 10.1) is of course valuable (and imperative!), the portfolio returns are the ultimate yardstick during a backtest. One essential element in such an exercise is a benchmark because raw and absolute metrics don’t mean much on their own.

This is not only true at the portfolio level, but also at the ML engine level. In most of the trials of the previous chapters, the MSE of the models on the testing set revolves around 0.037. An interesting figure is the variance of one-month returns on this set, which corresponds to the error made by a constant prediction of 0 all the time. This figure is equal to 0.037, which means that the sophisticated algorithms don’t really improve on a naive heuristic. This benchmark is the one used in the out-of-sample $R^2$ of Gu, Kelly, and Xiu (2020b).
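The comparison with a constant prediction of zero can be made concrete with a short sketch. We assume here that the out-of-sample $R^2$ takes the form $1-\sum_i(r_i-\hat{r}_i)^2/\sum_i r_i^2$, i.e., without demeaning the realized returns, so that the zero forecast scores exactly zero. The function name and the numbers are illustrative.

```python
import numpy as np

def r2_oos(realized, predicted):
    """Out-of-sample R^2 benchmarked against a constant forecast of zero."""
    realized = np.asarray(realized, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return 1 - np.sum((realized - predicted) ** 2) / np.sum(realized ** 2)

y = np.array([0.05, -0.03, 0.02, -0.01])     # toy realized returns
r2_zero = r2_oos(y, np.zeros_like(y))        # naive forecast: always zero
r2_half = r2_oos(y, 0.5 * y)                 # forecast capturing half of each move
```

A model with an MSE equal to the mean squared return has an $R^2$ of zero under this definition, which matches the situation described above.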

In portfolio choice, the most elementary allocation is the uniform one, whereby each asset receives the same weight. This seemingly simplistic solution is in fact an incredible benchmark, one that is hard to beat consistently (see DeMiguel, Garlappi, and Uppal (2009) and Plyakha, Uppal, and Vilkov (2016)). Theoretically, uniform portfolios are optimal when uncertainty, ambiguity or estimation risk is high (Pflug, Pichler, and Wozabal (2012), Maillet, Tokpavi, and Vaucher (2015)) and empirically, it cannot be outperformed even at the factor level (Dichtl, Drobetz, and Wendt (2020)). Below, we will pick an equally weighted (EW) portfolio of all stocks as our benchmark.

We then turn to the definition of the usual metrics used both by practitioners and academics alike. Henceforth, we write $r^P=(r_t^P)_{1\le t\le T}$ and $r^B=(r_t^B)_{1\le t\le T}$ for the returns of the portfolio and those of the benchmark, respectively. When referring to some generic returns, we simply write $r_t$. There are many ways to analyze them and most of them rely on their distribution.

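The simplest indicator is the average return:

$$\bar{r}_P=\mu_P=\mathbb{E}[r^P]\approx\frac{1}{T}\sum_{t=1}^T r_t^P, \quad \bar{r}_B=\mu_B=\mathbb{E}[r^B]\approx\frac{1}{T}\sum_{t=1}^T r_t^B,$$

where, obviously, the portfolio is noteworthy if $\mathbb{E}[r^P]>\mathbb{E}[r^B]$. Note that we use the arithmetic average above but the geometric one is also an option, in which case:

$$\tilde{\mu}_P\approx\left(\prod_{t=1}^T(1+r_t^P)\right)^{1/T}-1, \quad \tilde{\mu}_B\approx\left(\prod_{t=1}^T(1+r_t^B)\right)^{1/T}-1.$$

The benefit of this second definition is that it takes the compounding of returns into account and hence compensates for volatility pumping. To see this, consider a very simple two-period model with returns $-r$ and $+r$. The arithmetic average is zero, but the geometric one $\sqrt{1-r^2}-1$ is negative.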
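The two-period example can be checked numerically. The value $r=10\%$ is chosen arbitrarily.

```python
import numpy as np

r = np.array([-0.10, 0.10])                          # returns -r and +r with r = 10%
arithmetic = r.mean()                                # simple average
geometric = np.prod(1 + r) ** (1 / len(r)) - 1       # compounded average
closed_form = np.sqrt(1 - 0.10 ** 2) - 1             # sqrt(1 - r^2) - 1
```

The arithmetic average is zero while the geometric one is slightly negative (roughly $-0.5\%$ per period), in line with the closed-form expression.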

Akin to accuracy, hit ratios evaluate the proportion of times when the position is in the right direction (long when the realized return is positive and short when it is negative). Hence hit ratios evaluate the propensity to make good guesses. This can be computed at the asset level (the proportion of positions in the correct direction) or at the portfolio level. In all cases, the computation can be performed on raw returns or on relative returns (e.g., compared to a benchmark). A meaningful hit ratio is the proportion of times that a strategy beats its benchmark. This is of course not sufficient, as many small gains can be offset by a few large losses.

Lastly, one important precision. In all examples of supervised learning tools in the book, we compared the hit ratios to 0.5. This is in fact wrong because if an investor is bullish, he or she may always bet on upward moves. In this case, the hit ratio is the percentage of time that returns are positive. Over the long run, this probability is above 0.5. In our sample, it is equal to 0.556, which is well above 0.5. This could be viewed as a benchmark to be surpassed.
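The point about the always-long benchmark can be illustrated with a toy example. The positions and returns below are invented, and the function name hit_ratio is hypothetical.

```python
import numpy as np

def hit_ratio(positions, realized):
    """Share of dates on which the sign of the position matches the sign of the return."""
    return float(np.mean(np.sign(positions) == np.sign(realized)))

realized = np.array([0.02, 0.01, -0.03, 0.04, 0.01])   # toy returns, mostly positive
positions = np.array([1, -1, 1, 1, -1])                # +1 = long, -1 = short

strategy_hits = hit_ratio(positions, realized)
always_long_hits = hit_ratio(np.ones_like(realized), realized)
```

Here the always-long investor is right 80% of the time and the strategy only 40% of the time, so a threshold of 0.5 would be the wrong yardstick for this sample.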

Pure performance measures are almost always accompanied by risk measures. The second moment of returns is usually used to quantify the magnitude of fluctuations of the portfolio. A large variance implies sizable movements in returns, and hence in portfolio values. This is why the standard deviation of returns is called the volatility of the portfolio.

$$\sigma_P^2=\mathbb{V}[r^P]\approx\frac{1}{T-1}\sum_{t=1}^T(r_t^P-\mu_P)^2, \quad \sigma_B^2=\mathbb{V}[r^B]\approx\frac{1}{T-1}\sum_{t=1}^T(r_t^B-\mu_B)^2.$$

In this case, the portfolio can be preferred if it is less risky compared to the benchmark, i.e., when $\sigma_P^2<\sigma_B^2$ and when average returns are equal (or comparable).

Higher order moments of returns are sometimes used (skewness and kurtosis), but they are far less common. We refer for instance to Harvey et al. (2010) for one method that takes them into account in the portfolio construction process.

For some people, the volatility is an incomplete measure of risk. It can be argued that it should be decomposed into ‘good’ volatility (when prices go up) versus ‘bad’ volatility when they go down. The downward semi-variance is computed as the variance taken over the negative returns:

$$\sigma_-^2\approx\frac{1}{\text{card}(r_t<0)}\sum_{t=1}^T(r_t-\mu_P)^2 1_{\{r_t<0\}}.$$

The average return and the volatility are the typical moment-based metrics used by practitioners. Other indicators rely on different aspects of the distribution of returns with a focus on tails and extreme events. The Value-at-Risk (VaR) is one such example. If $F_r$ is the empirical cdf of returns, the VaR at a level of confidence $\alpha$ (often taken to be 95%) is

$$\text{VaR}_\alpha(r)=F_r^{-1}(1-\alpha).$$

It is equal to the realization of a bad scenario (of return) that is expected to happen a fraction $1-\alpha$ of the time on average. An even more conservative measure is the so-called Conditional Value at Risk (CVaR), also known as expected shortfall, which computes the average loss over the worst $100(1-\alpha)$% of scenarios. Its empirical evaluation is

$$\text{CVaR}_\alpha(r)=\frac{1}{\text{card}(r_t<\text{VaR}_\alpha(r))}\sum_{r_t<\text{VaR}_\alpha(r)}r_t.$$

Going crescendo in the severity of risk measures, the ultimate evaluation of loss is the maximum drawdown. It is equal to the maximum loss suffered from the peak value of the strategy. If we write $P_t$ for the time-$t$ value of a portfolio, the drawdown is

$$D_T^P=\underset{0\le t\le T}{\max}P_t-P_T,$$

and the maximum drawdown is

$$MD_T^P=\underset{0\le s\le T}{\max}\left(\underset{0\le t\le s}{\max}P_t-P_s\right).$$
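The tail measures discussed above can be sketched in a few lines. The sketch makes several assumptions: the VaR is taken as the empirical $(1-\alpha)$ quantile of returns (with NumPy's default linear interpolation), the CVaR averages the returns at or below that quantile, and the drawdown is measured in value units as the gap between the running peak and the current value. Function names and data are illustrative.

```python
import numpy as np

def var_cvar(returns, alpha=0.95):
    """Empirical VaR (a return quantile) and CVaR (mean of returns at or below it)."""
    returns = np.asarray(returns, dtype=float)
    var = np.quantile(returns, 1 - alpha)
    cvar = returns[returns <= var].mean()
    return var, cvar

def max_drawdown(values):
    """Largest gap between a running peak and a subsequent portfolio value."""
    values = np.asarray(values, dtype=float)
    running_peak = np.maximum.accumulate(values)
    return np.max(running_peak - values)

toy_returns = np.array([-0.10, -0.05, -0.02, 0.00, 0.01, 0.02, 0.03, 0.04, 0.05, 0.06])
var_80, cvar_80 = var_cvar(toy_returns, alpha=0.80)

toy_values = np.array([100.0, 120.0, 90.0, 110.0, 80.0, 130.0])
mdd = max_drawdown(toy_values)    # peak of 120 followed by a trough at 80
```

Dividing the gap by the running peak would give the relative (percentage) drawdown, which is the version often reported in practice.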

In the spirit of factor models, performance can also be assessed through the lens of exposures. If we recall the original formulation from Equation (3.1):

$$r_{t,n}=\alpha_n+\sum_{k=1}^K\beta_{t,k,n}f_{t,k}+\epsilon_{t,n},$$

then the estimated $\hat{\alpha}_n$ is the performance that cannot be explained by the other factors. When returns are excess returns (over the risk-free rate) and when there is only one factor, the market factor, then this quantity is called Jensen’s alpha (Jensen (1968)). Often, it is simply referred to as alpha. The other estimate, $\hat{\beta}_{t,M,n}$ ($M$ for market), is the market beta.
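In the single-factor case, the alpha and the market beta can be estimated by ordinary least squares. The sketch below uses noise-free toy data so that the estimates recover the true values exactly; the function name is hypothetical and a real application would of course involve noisy excess returns.

```python
import numpy as np

def jensen_alpha_beta(excess_portfolio, excess_market):
    """OLS regression of portfolio excess returns on market excess returns."""
    X = np.column_stack([np.ones(len(excess_market)), excess_market])  # intercept + market
    coef, *_ = np.linalg.lstsq(X, excess_portfolio, rcond=None)
    return coef[0], coef[1]      # (alpha, beta)

market = np.array([0.01, -0.02, 0.03, 0.00, 0.015])   # toy market excess returns
portfolio = 0.001 + 1.2 * market                      # true alpha = 0.1%, true beta = 1.2
alpha_hat, beta_hat = jensen_alpha_beta(portfolio, market)
```

Adding columns to the design matrix (size, value, momentum) extends the same regression to multifactor alphas.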

Because of the rise of factor investing, it has become customary to also report the alpha of more exhaustive regressions. Adding the size and value premium (as in Fama and French (1993)) and even momentum (Carhart (1997)) helps understand if a strategy generates value beyond that which can be obtained through the usual factors.

The tradeoff between the average return and the volatility has been a cornerstone of modern finance since Markowitz (1952). The simplest way to synthesize both metrics is via the information ratio:

$$IR(P,B)=\frac{\mu_{P-B}}{\sigma_{P-B}},$$

where the index $P-B$ implies that the mean and standard deviations are computed on the long-short portfolio with returns $r_t^P-r_t^B$. The denominator $\sigma_{P-B}$ is sometimes called the tracking error.

The most widespread information ratio is the Sharpe ratio (Sharpe (1966)) for which the benchmark is some riskless asset. Instead of directly computing the information ratio between two portfolios or strategies, it is often customary to compare their Sharpe ratios. Simple comparisons can benefit from statistical tests (see, e.g., Ledoit and Wolf (2008)).
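As an illustration, the information ratio can be computed from the series of return differences. The sketch assumes the sample standard deviation (with $T-1$ in the denominator) for the tracking error; the data and the function name are made up.

```python
import numpy as np

def information_ratio(r_p, r_b):
    """Mean of the return differences divided by their standard deviation."""
    diff = np.asarray(r_p, dtype=float) - np.asarray(r_b, dtype=float)
    return diff.mean() / diff.std(ddof=1)     # denominator = tracking error

r_p = np.array([0.02, 0.01, 0.03, -0.01])     # toy portfolio returns
r_b = np.array([0.01, 0.01, 0.01, 0.00])      # toy benchmark returns
ir = information_ratio(r_p, r_b)

# Sharpe ratio as a special case: the benchmark is a riskless asset
r_f = np.full(4, 0.001)                       # hypothetical constant risk-free rate
sharpe = information_ratio(r_p, r_f)
```

Both figures are expressed at the frequency of the data; annualizing requires a scaling assumption (for monthly returns, multiplying by $\sqrt{12}$ is a common convention).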

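More extreme risk measures can serve as denominator in risk-adjusted indicators. The Managed Account Report (MAR) ratio is, for example, computed as

$$MAR^P=\frac{\tilde{\mu}_P}{MD^P},$$

where $\tilde{\mu}_P$ is the geometric average return and $MD^P$ the maximum drawdown of the portfolio, while the Treynor ratio is equal to

$$\text{Treynor}=\frac{\mu_P}{\hat{\beta}_M},$$

i.e., the (excess) return divided by the market beta (see Treynor (1965)). This definition was generalized to multifactor exposures by Hübner (2005) into the generalized Treynor ratio:

$$GT=\frac{\mu_P}{\sum_{k=1}^K\bar{f}_k\hat{\beta}_k},$$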

where the $\bar{f}_k$ are the sample average of the factors $f_{t,k}$. We refer to the original article for a detailed account of the analytical properties of this ratio.

Updating portfolio composition is not free. In all generality, the total cost of one rebalancing at time $t$ is proportional to $C_t=\sum_{n=1}^N | \Delta w_{t,n}|c_{t,n}$, where $\Delta w_{t,n}$ is the change in position for asset $n$ and $c_{t,n}$ the corresponding fee. This last quantity is often hard to predict, thus it is customary to use a proxy that depends for instance on market capitalization (large stocks have more liquid shares and thus require smaller fees) or bid-ask spreads (smaller spreads mean smaller fees).

As a first order approximation, it is often useful to compute the average turnover:

$$\text{Turnover}=\frac{1}{T-1}\sum_{t=2}^T\sum_{n=1}^N|w_{t,n}-w_{t-,n}|,$$

where $w_{t,n}$ are the desired time-$t$ weights in the portfolio and $w_{t-,n}$ are the weights just before the rebalancing. The positions of the first period (launching weights) are excluded from the computation by convention. Transaction costs can then be proxied as a multiple of turnover (times some average or median cost in the cross-section of firms). This is a first order estimate of realized costs that does not take into consideration the evolution of the scale of the portfolio. Nonetheless, a rough figure is much better than none at all.
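A possible computation of the average turnover is sketched below. It assumes that the weights just before rebalancing are the previous target weights drifted by the asset returns of the period and renormalized to sum to one; the function name and the inputs are illustrative.

```python
import numpy as np

def average_turnover(w_target, asset_returns):
    """Average of sum_n |w_{t,n} - w_{t-,n}| over all rebalancing dates but the first.

    w_target:      (T, N) array of desired weights at each date
    asset_returns: (T, N) array of returns realized over the period ending at each date
    """
    w_target = np.asarray(w_target, dtype=float)
    asset_returns = np.asarray(asset_returns, dtype=float)
    drifted = w_target[:-1] * (1 + asset_returns[1:])      # weights moved by prices
    drifted /= drifted.sum(axis=1, keepdims=True)          # renormalize to sum to one
    trades = np.abs(w_target[1:] - drifted).sum(axis=1)    # one figure per rebalancing
    return trades.mean()

# Two assets, two dates: a 50/50 portfolio rebalanced back to 50/50
w_target = np.array([[0.5, 0.5], [0.5, 0.5]])
asset_returns = np.array([[0.0, 0.0], [0.10, -0.10]])
turnover = average_turnover(w_target, asset_returns)
```

Even a constant-weight policy such as the equally weighted portfolio generates turnover, because prices move the weights between two rebalancing dates.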

Once transaction costs (TCs) have been annualized, they can be deducted from average returns to yield a more realistic picture of profitability. In the same vein, the transaction cost-adjusted Sharpe ratio of a portfolio $P$ is given by

$$SR_{TC}=\frac{\mu_P-TC}{\sigma_P}. \tag{12.4}$$

Transaction costs are often overlooked in academic articles but can have a sizable impact in real life trading (see, e.g., Novy-Marx and Velikov (2015)). DeMiguel et al. (2020) show how to use factor investing (and exposures) to combine and offset positions and reduce overall fees.

One of the most common mistakes in portfolio backtesting is the use of forward looking data. It is for instance easy to fall in the trap of the danger zone depicted in Figure 12.2. In this case, the labels used at time $t$ are computed with knowledge of what happens at times $t+1$, $t+2$, etc. It is worth triple checking every step in the code to make sure that strategies are not built on prescient data.

The second major problem is backtest overfitting. The analogy with training set overfitting is easy to grasp. It is a well-known issue and was formalized for instance in White (2000) and Romano and Wolf (2005). In portfolio choice, we refer to Bajgrowicz and Scaillet (2012), Bailey and Prado (2014) and Lopez de Prado and Bailey (2020), and the references therein.

At any given moment, a backtest depends on only one particular dataset. Often, the result of the first backtest will not be satisfactory - for many possible reasons. Hence, it is tempting to have another try, while altering some parameters that were probably not optimal. This second test may be better, but not quite good enough - yet. Thus, in a third trial, a new weighting scheme can be tested, along with a new forecasting engine (more sophisticated). Iteratively, the backtester is bound to end up with a strategy that performs well enough: it is just a matter of time and trials.

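One consequence of backtest overfitting is that it is illusory to hope for the same Sharpe ratios in live trading as those obtained in the backtest. Reasonable professionals divide the Sharpe ratio by two at least (Harvey and Liu (2015), Suhonen, Lennkh, and Perez (2017)). In Bailey and Prado (2014), the authors even propose a statistical test for Sharpe ratios, provided that some metrics of all tested strategies are stored in memory. The formula for deflated Sharpe ratios is:

$$t=\phi\left((SR-SR^*)\sqrt{\frac{T-1}{1-\gamma_3 SR+\frac{\gamma_4-1}{4}SR^2}}\right), \tag{12.5}$$

where $SR$ is the Sharpe Ratio obtained by the best strategy among all that were tested, and

$$SR^*=\mathbb{E}[SR_m]+\sqrt{\mathbb{V}[SR_m]}\left((1-\gamma)\phi^{-1}\left(1-\frac{1}{N}\right)+\gamma\phi^{-1}\left(1-\frac{1}{Ne}\right)\right)$$

is the theoretical average maximum SR. Moreover, $T$ is the number of trading dates, $\gamma_3$ and $\gamma_4$ are the skewness and kurtosis of the returns of the chosen (best) strategy, $\phi$ is the cdf of the standard Gaussian law, $\gamma\approx 0.577$ is the Euler-Mascheroni constant, $N$ is the number of strategy trials, and $\mathbb{E}[SR_m]$ and $\mathbb{V}[SR_m]$ are the mean and variance of the Sharpe ratios across these trials.

If $t$ defined above is below a certain threshold (e.g., 0.95), then the $SR$ cannot be deemed significant compared to all of those that were tested. Most of the time, sadly, that is the case. In Equation (12.5), the realized SR must be above the theoretical maximum $SR^*$ and the scaling factor must be sufficiently large to push the argument inside $\phi$ close enough to two, so that $t$ surpasses 0.95.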
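A sketch of the test is given below. It assumes the formulation of Bailey and Prado (2014) as we understand it: the statistic is the Gaussian cdf of $(SR-SR^*)\sqrt{T-1}/\sqrt{1-\gamma_3SR+(\gamma_4-1)SR^2/4}$, and $SR^*$ combines the mean and standard deviation of the Sharpe ratios of all trials with two Gaussian quantiles weighted by the Euler-Mascheroni constant. Sharpe ratios are not annualized (they are expressed at the frequency of the $T$ observations), and the trial values are invented. The code relies on scipy for the Gaussian cdf and quantile function.

```python
import numpy as np
from scipy.stats import norm

EULER_GAMMA = 0.5772156649    # Euler-Mascheroni constant

def expected_max_sr(sr_trials):
    """Theoretical average maximum Sharpe ratio across the tested strategies."""
    n = len(sr_trials)
    mean, std = np.mean(sr_trials), np.std(sr_trials, ddof=1)
    return mean + std * ((1 - EULER_GAMMA) * norm.ppf(1 - 1 / n)
                         + EULER_GAMMA * norm.ppf(1 - 1 / (n * np.e)))

def deflated_sharpe(sr, sr_star, T, skew, kurt):
    """Deflated Sharpe ratio statistic (a probability between 0 and 1)."""
    scale = np.sqrt((T - 1) / (1 - skew * sr + (kurt - 1) / 4 * sr ** 2))
    return norm.cdf((sr - sr_star) * scale)

sr_trials = np.array([0.02, 0.05, -0.01, 0.08, 0.11, 0.04])   # toy monthly SRs of 6 trials
sr_star = expected_max_sr(sr_trials)
dsr = deflated_sharpe(sr_trials.max(), sr_star, T=120, skew=0.0, kurt=3.0)
```

In this toy case, the best Sharpe ratio barely exceeds the expected maximum, so the statistic is far below 0.95 and the best strategy cannot be deemed significant.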

In the scientific community, test overfitting is also known as p-hacking. It is rather common in financial economics and the reading of Harvey (2017) is strongly advised to grasp the magnitude of the phenomenon. p-hacking is also present in most fields that use statistical tests (see, e.g., Head et al. (2015) to cite but one reference). There are several ways to cope with p-hacking:

The first option is wise, but the drawback is that the decision process is then left to another arbitrary yardstick.

As is mentioned at the beginning of the chapter, two common sense references for backtesting are Fabozzi and Prado (2018) and R. Arnott, Harvey, and Markowitz (2019). The pieces of advice provided in these two articles are often judicious and thoughtful.

One additional comment pertains to the output of the backtest. One simple, intuitive and widespread metric is the transaction cost-adjusted Sharpe ratio defined in Equation (12.4). In the backtest, let us call $SR_{TC}^B$ the corresponding value for the benchmark, which we like to define as the equally-weighted portfolio of all assets in the trading universe (in our dataset, roughly one thousand US equities). If the $SR_{TC}^P$ of the best strategy is above $2\times SR_{TC}^B$, then there is probably a glitch somewhere in the backtest.

This criterion holds under two assumptions:

It is unlikely that any realistic strategy can outperform a solid benchmark by a very wide margin over the long term. Being able to improve the benchmark’s annualized return by 150 basis points (with comparable volatility) is already a great achievement. Backtests that deliver returns more than 5% above those of the benchmark are dubious.

This subsection is split into two parts: in the first, we discuss the reasons that make forecasting such a difficult task and in the second we present an important theoretical result originally developed for machine learning but that sheds light on any discipline confronted with out-of-sample tests. An interesting contribution related to this topic is the study from Farmer, Schmidt, and Timmermann (2019). The authors assess the predictive fit of linear models through time and show that it varies strongly: sometimes the model performs very well, sometimes not so much. There is no reason why this should not be the case for ML algorithms as well.

The careful reader must have noticed that throughout Chapters 5 to 11, the performance of ML engines is underwhelming. These disappointing results are there on purpose and highlight the crucial truth that machine learning is no panacea, no magic wand, no philosopher’s stone that can transform data into golden predictions. Most ML-based forecasts fail. This is in fact not only true for very enhanced and sophisticated techniques, but also for simpler econometric approaches (Dichtl et al. (2020)), which again underlines the need to replicate results to challenge their validity.

One reason for that is that datasets are full of noise and extracting the slightest amount of signal is a tough challenge (we recommend a careful reading of the introduction of Timmermann (2018) for more details on this topic). One rationale for that is the ever time-varying nature of factor analysis in the equity space. Some factors can perform very well during one year and then poorly the next year and these reversals can be costly in the context of fully automated data-based allocation processes.

In fact, this is one major difference with many fields for which ML has made huge advances. In image recognition, numbers will always have the same shape, and so will cats, buses, etc. Likewise, a verb will always be a verb and syntaxes in languages do not change. This invariance, though sometimes hard to grasp, is nonetheless key to the great improvement both in computer vision and natural language processing.

In factor investing, there does not seem to be such invariance (see Cornell (2020)). There is no factor and no (possibly nonlinear) combination of factors that can explain and accurately forecast returns over long periods of several decades. The academic literature has yet to find such a model; but even if it did, a simple arbitrage reasoning would logically invalidate its conclusions in future datasets.

We start by underlining that the no free lunch theorem in machine learning has nothing to do with the asset pricing condition with the same name (see, e.g., Delbaen and Schachermayer (1994), or, more recently, Cuchiero, Klein, and Teichmann (2016)). The original formulation was given by Wolpert (1992a) but we also recommend a look at the more recent reference Ho and Pepyne (2002). There are in fact several theorems and two of them can be found in Wolpert and Macready (1997).

The statement of the theorem is very abstract and requires some notational conventions. We assume that any training sample $S=(\{\textbf{x}_1,y_1\}, \dots, \{\textbf{x}_I,y_I\})$ is such that there exists an oracle function $f$ that perfectly maps the features to the labels: $y_i=f(\textbf{x}_i)$. The oracle function $f$ belongs to a very large set of functions $\mathcal{F}$. In addition, we write $\mathcal{H}$ for the set of functions to which the forecaster will resort to approximate $f$. For instance, $\mathcal{H}$ can be the space of feed-forward neural networks, or the space of decision trees, or the union of both. Elements of $\mathcal{H}$ are written $h$ and $\mathbb{P}[h|S]$ stands for the (largely unknown) distribution of $h$ knowing the sample $S$. Similarly, $\mathbb{P}[f|S]$ is the distribution of the oracle function knowing $S$. Finally, the features have a given law, $\mathbb{P}[\textbf{x}]$.

Let us now consider two models, say $h_1$ and $h_2$. The statement of the theorem is usually formulated with respect to a classification task. Knowing $S$, the error when choosing $h_k$ induced by samples outside of the training sample $S$ can be quantified as

$$E_k(S)=\int_{f,h}\int_{\textbf{x}\notin S}\left(1-\delta(f(\textbf{x}),h_k(\textbf{x}))\right)\mathbb{P}[f|S]\,\mathbb{P}[h|S]\,\mathbb{P}[\textbf{x}],$$

where $\delta(\cdot,\cdot)$ is the Kronecker delta function:

$$\delta(x,y)=\begin{cases}0 & \text{if } x\neq y, \\ 1 & \text{if } x=y.\end{cases}$$

One of the no free lunch theorems states that $E_1(S)=E_2(S)$, that is, that with the sole knowledge of $S$, there can be no superior algorithm, on average. In order to build a well-performing algorithm, the analyst or econometrician must have prior views on the structure of the relationship between $y$ and $\textbf{x}$ and integrate these views in the construction of the model. Unfortunately, this can also yield underperforming models if the views are incorrect.

We finally propose a fully detailed example of one implementation of an ML-based strategy run on a careful backtest. What follows is a generalization of the content of Section 5.2.2. In the same spirit, we split the backtest in four parts:

Accordingly, we start with initializations.

    import numpy as np                                               # Arrays (np is used below)
    import xgboost as xgb
    import datetime as dt
    from datetime import datetime

    sep_oos = "2007-01-01"                                           # Starting point for backtest
    ticks = list(data_ml['stock_id'].unique())                       # List of all asset ids
    N = len(ticks)                                                   # Max number of assets
    t_oos = list(returns.index[returns.index > sep_oos].values)      # Out-of-sample dates
    t_as = list(returns.index.values)                                # All dates
    Tt = len(t_oos)                                                  # Nb of out-of-sample dates
    nb_port = 2                                                      # Nb of portfolios/strategies
    portf_weights = np.zeros(shape=(Tt, nb_port, max(ticks) + 1))    # Initialize portfolio weights
    portf_returns = np.zeros(shape=(Tt, nb_port))                    # Initialize portfolio returns

This first step is crucial: it lays the groundwork for the core of the backtest. We consider only two strategies: one ML-based and the EW (1/N) benchmark. The main (weighting) function will consist of these two components, but we define the sophisticated one in a dedicated wrapper. The ML-based weights are derived from XGBoost predictions with 80 trees, a learning rate of 0.3 and a maximum tree depth of 4. This makes the model complex but not exceedingly so. Once the predictions are obtained, the weighting scheme is simple: it is an EW portfolio over the best half of the stocks (those with above median prediction).

In the function below, all parameters (e.g., the learning rate eta or the number of trees num_boost_round) are hard-coded. They can easily be passed as arguments next to the data inputs. One very important detail is that in contrast to the rest of the book, the label is the 12-month future return. The main reason for this is rooted in the discussion from Section 4.6. Also, to speed up the computations, we remove the bulk of the distribution of the labels and keep only the top 20% and bottom 20%, as is advised in Coqueret and Guida (2020). The filtering levels could also be passed as arguments.

    def weights_xgb(train_data, test_data, features):
        train_features = train_data[features]                                     # Indep. variables
        train_label = train_data['R12M_Usd'] / np.exp(train_data['Vol1Y_Usd'])    # Dep. variable (vol-scaled)
        ind = ((train_label < np.quantile(train_label, 0.2)) |
               (train_label > np.quantile(train_label, 0.8)))                     # Filter: keep the tails
        train_features = train_features.loc[ind]                                  # Filtered features
        train_label = train_label.loc[ind]                                        # Filtered label
        train_matrix = xgb.DMatrix(train_features, label=train_label)             # XGB format!
        params = {'eta': 0.3,                                                     # Learning rate
                  'objective': "reg:squarederror",                                # Objective function
                  'max_depth': 4}                                                 # Maximum depth of trees
        fit_xgb = xgb.train(params, train_matrix, num_boost_round=80)             # Number of trees used
        test_features = test_data[features]                                       # Test sample
        test_matrix = xgb.DMatrix(test_features)                                  # XGB format!
        pred = fit_xgb.predict(test_matrix)                                       # One prediction per stock
        w_names = test_data["stock_id"]                                           # Stocks' list
        w = pred > np.median(pred)                                                # Keep only the 50% best predictions
        w = w / np.sum(w)                                                         # Best predictions, equally-weighted
        return w, w_names

Compared to the structure proposed in Section 6.4.6, the difference is that the label is not only based on long-term returns but also relies on a volatility component. Even though the denominator in the label is the exponential of the volatility quantile, it seems fair to say that it is inspired by the Sharpe ratio and that the model seeks to explain and forecast a risk-adjusted return instead of a raw return. A stock with very low volatility will have its return unchanged in the label, while a stock with very high volatility will see its return divided by a factor close to three (exp(1) ≈ 2.718).
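The effect of the denominator can be checked with a few lines. This sketch assumes, as the paragraph above suggests, that the volatility feature is a quantile lying between 0 and 1. The helper name `risk_adjusted_label` and the 10% return are hypothetical.

```python
import math

def risk_adjusted_label(ret_12m, vol_quantile):
    """12-month return divided by exp(volatility quantile), as in weights_xgb."""
    return ret_12m / math.exp(vol_quantile)

# Same hypothetical 12-month return (10%) for three volatility quantiles
for vol in (0.0, 0.5, 1.0):
    print(vol, round(risk_adjusted_label(0.10, vol), 4))
```

The label equals the raw return at the lowest quantile and shrinks by a factor of exp(1) at the highest one.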

This function is then embedded in the global weighting function which only wraps two schemes: the EW benchmark and the ML-based policy.

def portf_compo(train_data, test_data, features, j):

    if j == 0: # This is the benchmark

        N = len(test_data["stock_id"]) # Test data dictates allocation

        w = np.repeat(1/N, N) # EW portfolio

        w_names = test_data["stock_id"] # Asset names

        return w, w_names

    elif j == 1: # This is the ML strategy.

        return weights_xgb(train_data, test_data, features)

Equipped with this function, we can turn to the main backtesting loop. Because we use a large-scale model, the computation time for the loop is large (possibly a few hours on a slow machine with CPU). Resorting to functional programming can speed up the loop (see exercise at the end of the chapter). Also, a simple equally weighted benchmark portfolio can be coded with pandas functions only.

m_offset = 12 # Offset in months for buffer period (label)

train_size = 5 # Size of training set in years

for t in range(len(t_oos)-1): # Stop before last date: no fwd ret.!

    print(t_oos[t]) # Just checking the date status

    train_end = datetime.strptime(t_oos[t], "%Y-%m-%d") - dt.timedelta(m_offset*30) # End of training sample (buffer)

    train_start = train_end - dt.timedelta(365 * train_size) # Start of training sample

    ind = (data_ml['date'] < datetime.strftime(train_end, "%Y-%m-%d")) & (data_ml['date'] > datetime.strftime(train_start, "%Y-%m-%d")) # Training dates

    train_data = data_ml.loc[ind,:] # Train sample

    test_data = data_ml.loc[data_ml['date'] == t_oos[t],:] # Test sample

    realized_returns = test_data["R1M_Usd"] # Computing returns via: 1M holding period!

    for j in range(nb_port):

        temp_weights, stocks = portf_compo(train_data, test_data, features, j) # Weights

        portf_weights[t,j,stocks] = temp_weights # Allocate weights

        portf_returns[t,j] = np.sum(temp_weights * realized_returns) # Compute returns

There are two important comments to be made on the above code. The first comment pertains to the two parameters that are defined in the first lines. They refer to the size of the training sample (5 years) and the length of the buffer period shown in Figure 12.2. This buffer period is imperative because the label is based on a long-term (12-month) return. This lag is compulsory to avoid any forward-looking bias in the backtest.
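The bounds of the training sample implied by these two parameters can be computed in isolation. The sketch below reuses the same day-count conventions as the loop (30-day months, 365-day years). The helper `training_window` and the date 2007-01-31 are hypothetical.

```python
from datetime import datetime, timedelta

m_offset = 12     # Buffer in months (30-day months, as in the loop)
train_size = 5    # Training sample size in years (365-day years)

def training_window(date_str):
    """Return the (start, end) bounds of the training sample for one rebalancing date."""
    t = datetime.strptime(date_str, "%Y-%m-%d")
    end = t - timedelta(days=m_offset * 30)          # Latest admissible training date
    start = end - timedelta(days=365 * train_size)   # Earliest training date
    return start.strftime("%Y-%m-%d"), end.strftime("%Y-%m-%d")

print(training_window("2007-01-31"))
```

For this date, the training sample runs from 2001-02-06 to 2006-02-05 (bounds excluded), so no training date falls within the 360 days that precede the rebalancing date.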

Below, we create a function that computes the turnover (variation in weights). It requires both the weight values as well as the returns of all assets because the weights just before a rebalancing depend on the weights assigned in the previous period, as well as on the returns of the assets that have altered these original weights during the holding period.

def turnover(weights, asset_returns, t_oos):

    turn = 0

    for t in range(1, len(t_oos)):

        realised_returns = asset_returns[asset_returns.index == t_oos[t]].values # Returns at date t

        prior_weights = weights[t-1] * (1+realised_returns) # Before rebalancing

        turn = turn + np.sum(np.abs(weights[t] - prior_weights/np.sum(prior_weights)))

    return turn/(len(t_oos)-1)
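A one-period toy case shows what the function measures. This is a simplified sketch in plain Python, not the function above. The helper `one_period_turnover` and the two-asset figures are hypothetical.

```python
def one_period_turnover(prev_weights, asset_returns, new_weights):
    """Sum of absolute weight changes between drifted and target weights."""
    drifted = [w * (1 + r) for w, r in zip(prev_weights, asset_returns)]  # Before rebalancing
    total = sum(drifted)
    drifted = [w / total for w in drifted]                                # Renormalise to sum to one
    return sum(abs(n - d) for n, d in zip(new_weights, drifted))

# Hypothetical two-asset portfolio rebalanced back to equal weights
print(round(one_period_turnover([0.5, 0.5], [0.10, -0.10], [0.5, 0.5]), 4))
```

After returns of +10% and -10%, the weights drift to 55% and 45%, so going back to equal weights requires trading 5% on each leg, that is, a turnover of 0.10.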

Once turnover is defined, we embed it into a function that computes several key indicators.

def perf_met(portf_returns, weights, asset_returns, t_oos):

    avg_ret = np.nanmean(portf_returns) # Arithmetic mean

    vol = np.nanstd(portf_returns, ddof=1) # Volatility

    Sharpe_ratio = avg_ret / vol # Sharpe ratio

    VaR_5 = np.quantile(portf_returns, 0.05) # Value-at-risk

    turn = turnover(weights, asset_returns, t_oos) # using the turnover function

    met = [avg_ret, vol, Sharpe_ratio, VaR_5, turn] # Aggregation of all of this

    return met

Lastly, we build a function that loops on the various strategies.

def perf_met_multi(portf_returns, weights, asset_returns, t_oos, strat_name):

    J = weights.shape[1] # Number of strategies

    met = [] # Initialization of metrics

    for j in range(J): # One slightly less ugly loop

        temp_met = perf_met(portf_returns[:,j], weights[:,j,:], asset_returns, t_oos)

        met.append(temp_met)

    return pd.DataFrame(met, index=strat_name, columns = ['avg_ret', 'vol', 'Sharpe_ratio', 'VaR_5', 'turn']) # Stores the name of the strat

Given the weights and returns of the portfolios, it remains to compute the returns of the assets to plug them in the aggregate metrics function.

asset_returns = data_ml[['date', 'stock_id', 'R1M_Usd']].pivot(index='date', columns='stock_id',values='R1M_Usd')

na = list(set(np.arange(max(asset_returns.columns)+1)).difference(set(asset_returns.columns))) # find the missing stock_id

asset_returns[na]=0 # Adding into asset return dataframe

asset_returns = asset_returns.loc[:,sorted(asset_returns.columns)]

asset_returns.fillna(0, inplace=True) # Zero returns for missing points

perf_met_multi(portf_returns, portf_weights, asset_returns, t_oos,strat_name = ["EW", "XGB_SR"])

| | avg_ret | vol | Sharpe_ratio | VaR_5 | turn |
|---|---|---|---|---|---|
| EW | 0.009697 | 0.056429 | 0.171848 | -0.077125 | 0.071451 |
| XGB_SR | 0.012603 | 0.063768 | 0.197635 | -0.083359 | 0.567993 |

The ML-based strategy finally performs well! The gain is mostly obtained through the average return, while the volatility is higher than that of the benchmark. The net effect is that the Sharpe ratio is improved compared to the benchmark. The improvement is not breathtaking, but (hence?) it seems reasonable. It is noteworthy that turnover is substantially higher for the sophisticated strategy. Removing costs in the numerator (say, 0.005 times the turnover, as in Goto and Xu (2015), which is a conservative figure) is not innocuous, though: with the values reported in the table, the net Sharpe ratio of the ML-based strategy falls to roughly 0.153, which is below that of the benchmark (roughly 0.166).
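The cost adjustment can be reproduced from the figures in the table. The sketch below simply subtracts 0.005 times the turnover from the average return before dividing by the volatility. The helper `net_sharpe` and the dictionary layout are illustrative choices.

```python
# Values copied from the table above
metrics = {
    "EW":     {"avg_ret": 0.009697, "vol": 0.056429, "turn": 0.071451},
    "XGB_SR": {"avg_ret": 0.012603, "vol": 0.063768, "turn": 0.567993},
}
cost_rate = 0.005  # Cost per unit of turnover, as in the text

def net_sharpe(m, cost_rate):
    """Sharpe ratio after subtracting cost_rate * turnover from the average return."""
    return (m["avg_ret"] - cost_rate * m["turn"]) / m["vol"]

for name, m in metrics.items():
    gross = m["avg_ret"] / m["vol"]
    print(name, round(gross, 4), round(net_sharpe(m, cost_rate), 4))
```

With these inputs, the gross Sharpe ratios are about 0.172 (EW) and 0.198 (XGB_SR), while the net ones are about 0.166 and 0.153. The much higher turnover of the ML-based strategy is what drives the difference.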

Finally, it is always tempting to plot the corresponding portfolio values and we display two related graphs in Figure 12.3.

g1 = pd.DataFrame([t_oos, np.cumprod(1+portf_returns[:,0]), np.cumprod(1+portf_returns[:,1])],index = ["date","benchmark","ml_based"]).T # Creating cumulated timeseries

g1.reset_index(inplace=True) # Data wrangling

g1['date_month']=pd.to_datetime(g1['date']).dt.month # Creating a new column to select dataframe partition for second plot (yearly performance)

g1.set_index('date',inplace=True) # Setting date index for plots

g2=g1[g1['date_month']==12] # Selecting pseudo end of year NAV

g2=pd.concat([g2, g1.iloc[[0]]]) # Adding the first date of Jan 2007 (DataFrame.append was removed in pandas 2.0)

g2.sort_index(inplace=True) # Sorting dates

g1[["benchmark","ml_based"]].plot(figsize=[16,6],ylabel='Cumulated dollar value') # plot evidently!

g2[["benchmark","ml_based"]].pct_change(1).plot.bar(figsize=[16,6],ylabel='Yearly performance') # plot evidently!


FIGURE 12.3: Graphical representation of the performance of the portfolios.

Out of the 12 years of the backtest, the advanced strategy outperforms the benchmark during 10 years. It is less hurtful in two of the four years of aggregate losses (2015 and 2018). This is a satisfactory improvement because the EW benchmark is tough to beat!

To end this chapter, we quantify the concepts of Section 12.4.2. First, we build a function that is able to generate performance metrics for simple strategies that can be evaluated in batches. The strategies are pure factor bets and depend on three inputs: the chosen characteristic (e.g., market capitalization), a threshold level (quantile of the characteristic) and a direction (long position in the top or bottom of the distribution).

def strat(data, feature, thresh, direction):

    data_tmp = data[[feature, 'date', 'R1M_Usd']].copy() # Data for individual feature

    data_tmp['decision'] = direction*data_tmp[feature] > direction*thresh # Investment decision as a Boolean

    data_tmp = data_tmp.groupby('date').apply( # Date-by-date analysis

        lambda x: np.sum(x['decision']/np.sum(x['decision']) * x['R1M_Usd'])) # Asset contribution, weight * return

    avg = np.nanmean(data_tmp) # Portfolio average return

    sd = np.nanstd(data_tmp, ddof=1) # Portfolio volatility non annualised

    SR = avg / sd # Portfolio sharpe ratio

    return np.around([avg, sd, SR],4)

Then, we test the function on a triplet of arguments. We pick the price-to-book (Pb) ratio. The position is positive and the threshold is 0.3, which means that the strategy buys the stocks that have a Pb value above the 0.3 quantile of the distribution.

strat(data_ml, "Pb", 0.3, 1) # Long the stocks with Pb above the 0.3 threshold

array([0.0102, 0.0496, 0.2065])
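The role of the direction argument is easy to verify on a handful of values. The sketch below isolates the comparison used inside the function. The helper `decision` and the characteristic values are hypothetical.

```python
def decision(values, thresh, direction):
    """Boolean investment decision, mirroring the comparison used in strat."""
    return [direction * v > direction * thresh for v in values]

# Hypothetical uniformised characteristic values
x = [0.1, 0.25, 0.3, 0.5, 0.9]

print(decision(x, 0.3, 1))    # Long the stocks above the threshold
print(decision(x, 0.3, -1))   # Long the stocks below the threshold
```

Multiplying both sides by -1 flips the inequality, so a single line of code handles both the long-top and the long-bottom versions of the bet. A value exactly equal to the threshold is excluded in both cases.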

The output keeps three quantities that will be useful to compute the statistic (12.5). We must now generate these indicators for many strategies. We start by creating the grid of parameters.

import itertools

feature = ["Div_Yld", "Ebit_Bv", "Mkt_Cap_6M_Usd", "Mom_11M_Usd", "Pb", "Vol1Y_Usd"]

thresh = np.arange(0.2, 0.9, 0.1) # Threshold

direction = np.array([1,-1]) # Decision direction

This makes 84 strategies in total. We can proceed to see how they fare. We plot the corresponding Sharpe ratios below in Figure 12.4. The top plot shows the strategies that invest in the bottoms of the distributions of characteristics while the bottom plot pertains to the portfolios that are long in the upper parts of these distributions.
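The count of 84 can be recovered by enumerating the grid. In this sketch the thresholds are written out explicitly, under the assumption that `np.arange(0.2, 0.9, 0.1)` yields the seven values 0.2, 0.3, ..., 0.8. The name `grid` is illustrative.

```python
import itertools

feature = ["Div_Yld", "Ebit_Bv", "Mkt_Cap_6M_Usd", "Mom_11M_Usd", "Pb", "Vol1Y_Usd"]
thresh = [round(0.2 + 0.1 * k, 1) for k in range(7)]   # 0.2, 0.3, ..., 0.8 (assumed grid)
direction = [1, -1]

# Cartesian product of all parameter values
grid = list(itertools.product(feature, thresh, direction))

print(len(grid))   # 6 features x 7 thresholds x 2 directions
print(grid[0])     # First triplet of the grid
```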

grd = [] # Empty placeholder, parameters for the grid search

for f, t, d in itertools.product(feature,thresh,direction): # Parameters for the grid search

    strat_data = pd.DataFrame(strat(data_ml,f,t,d)).T # Function on which to apply the grid search

    strat_data.rename(columns={0: 'avg', 1: 'sd', 2: 'SR'}, inplace=True) # Change columns names

    strat_data[['feature', 'thresh', 'direction']] = f, t, d # Feeding parameters to construct the dataframe

    grd.append(strat_data) # Appending/inserting

grd = pd.concat(grd)[['feature','thresh','direction','avg', 'sd', 'SR']] # Putting all together and reordering columns

grd[grd['direction']==-1].pivot(index='thresh',columns='feature',values='SR').plot(figsize=[16,6],ylabel='Direction = -1') # Plot!

grd[grd['direction']==1].pivot(index='thresh',columns='feature',values='SR').plot(figsize=[16,6],ylabel='Direction = 1') # Plot!


FIGURE 12.4: Sharpe ratios of all backtested strategies.

The last step is to compute the statistic (12.5). We code it here:

from scipy import special as special

from scipy import stats as stats

def DSR(SR, Tt, M, g3, g4, SR_m, SR_v): # First, we build the function

    gamma = -special.digamma(1) # Euler-Mascheroni constant

    SR_star = SR_m + np.sqrt(SR_v)*((1-gamma)*stats.norm.ppf(1-1/M) + gamma*stats.norm.ppf(1-1/M/np.exp(1))) # SR*

    num = (SR-SR_star) * np.sqrt(Tt-1) # Numerator

    den = np.sqrt(1 - g3*SR + (g4-1)/4*SR**2) # Denominator

    return round(stats.norm.cdf(num/den),4)
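For reference, the quantity returned by the function can be transcribed term by term from the code. The notation below is an assumption read off the function's arguments: $\Phi$ is the cdf of the standard Gaussian law, $\gamma$ the Euler-Mascheroni constant, $M$ the number of strategies tested, $T$ the number of dates, $\overline{SR}$ and $\mathbb{V}[SR]$ the mean and variance of the Sharpe ratios across strategies (SR_m and SR_v), and $\gamma_3$ and $\gamma_4$ the skewness and kurtosis of the returns of the strategy (g3 and g4).

$$
SR^* = \overline{SR} + \sqrt{\mathbb{V}[SR]}\left((1-\gamma)\,\Phi^{-1}\left(1-\frac{1}{M}\right) + \gamma\,\Phi^{-1}\left(1-\frac{1}{Me}\right)\right)
$$

$$
DSR = \Phi\left(\frac{(SR-SR^*)\sqrt{T-1}}{\sqrt{1-\gamma_3\,SR+\frac{\gamma_4-1}{4}\,SR^2}}\right)
$$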

All that remains to do is to evaluate the arguments of the function. The “best” strategy is the one on the top left corner of Figure 12.4 and it is based on market capitalization.

M = grd.shape[0] # Number of strategies we tested

SR = np.max(grd['SR']) # The SR we want to test

SR_m = np.mean(grd['SR']) # Average SR across all strategies

SR_v = np.var(grd['SR'], ddof=1) # Variance of SR across all strategies

data_tmp = data_ml[['Mkt_Cap_6M_Usd', 'date', 'R1M_Usd']].copy() # feature = Mkt_Cap

data_tmp.rename({'Mkt_Cap_6M_Usd':'feature'}, axis=1, inplace=True)

data_tmp['decision'] = data_tmp['feature'] < 0.2 # Investment decision: 0.2 is the best threshold

returns_DSR = data_tmp.groupby('date').apply( # Date-by-date analysis

lambda x:np.sum(x['decision']/np.sum(x['decision']) * x['R1M_Usd'])) # Asset contribution, weight * return

g3 = stats.skew(returns_DSR) # Function/method from Scipy.stats

g4 = stats.kurtosis(returns_DSR, fisher=False) # Function/method from Scipy.stats

Tt = returns_DSR.shape[0] # Number of dates

DSR(SR, Tt, M, g3, g4, SR_m, SR_v) # The sought value!

0.6657

The value 0.6657 is not high enough (it does not reach the 90% or 95% threshold) to make the strategy significantly superior to the other ones that were considered in the batch of tests.
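One way to see why the hurdle is demanding is to look at the term that multiplies the dispersion of the Sharpe ratios in SR*: it grows with the number of strategies tested. The sketch below evaluates that term with the standard library only. The helper name `max_z_factor` and the values of M other than 84 are illustrative.

```python
import math
from statistics import NormalDist

EULER_GAMMA = 0.5772156649015329   # Euler-Mascheroni constant

def max_z_factor(M):
    """Multiplier of sqrt(SR_v) in SR*, increasing in the number of trials M."""
    z = NormalDist().inv_cdf                  # Standard Gaussian quantile function
    return (1 - EULER_GAMMA) * z(1 - 1 / M) + EULER_GAMMA * z(1 - 1 / (M * math.e))

for M in (10, 84, 1000):
    print(M, round(max_z_factor(M), 3))
```

The more strategies are tried, the higher the benchmark SR* that the best Sharpe ratio must clear, which is the sense in which the statistic deflates the winner of a large batch of tests.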

Build an integrated ensemble on top of 3 neural networks trained entirely with Keras. Each network obtains one third of predictors as input. The three networks yield a classification (yes/no or buy/sell). The overarching network aggregates the three outputs into a final decision. Evaluate its performance on the testing sample. Use the functional API.

Amrhein, Valentin, Sander Greenland, and Blake McShane. 2019. “Scientists Rise up Against Statistical Significance.” Nature 567: 305–7.

Arnott, Rob, Campbell R Harvey, Vitali Kalesnik, and Juhani Linnainmaa. 2019. “Alice’s Adventures in Factorland: Three Blunders That Plague Factor Investing.” Journal of Portfolio Management 45 (4): 18–36.

Arnott, Rob, Campbell R Harvey, and Harry Markowitz. 2019. “A Backtesting Protocol in the Era of Machine Learning.” Journal of Financial Data Science 1 (1): 64–74.

Bailey, David H, and Marcos López de Prado. 2014. “The Deflated Sharpe Ratio: Correcting for Selection Bias, Backtest Overfitting, and Non-Normality.” Journal of Portfolio Management 40 (5): 39–59.

Bajgrowicz, Pierre, and Olivier Scaillet. 2012. “Technical Trading Revisited: False Discoveries, Persistence Tests, and Transaction Costs.” Journal of Financial Economics 106 (3): 473–91.

Bodnar, Taras, Nestor Parolya, and Wolfgang Schmid. 2013. “On the Equivalence of Quadratic Optimization Problems Commonly Used in Portfolio Theory.” European Journal of Operational Research 229 (3): 637–44.

Carhart, Mark M. 1997. “On Persistence in Mutual Fund Performance.” Journal of Finance 52 (1): 57–82.

Coqueret, Guillaume. 2015. “Diversified Minimum-Variance Portfolios.” Annals of Finance 11 (2): 221–41.

Coqueret, Guillaume, and Tony Guida. 2020. “Training Trees on Tails with Applications to Portfolio Choice.” Annals of Operations Research 288: 181–221.

Cornell, Bradford. 2020. “Stock Characteristics and Stock Returns: A Skeptic’s Look at the Cross Section of Expected Returns.” Journal of Portfolio Management.

Cuchiero, Christa, Irene Klein, and Josef Teichmann. 2016. “A New Perspective on the Fundamental Theorem of Asset Pricing for Large Financial Markets.” Theory of Probability & Its Applications 60 (4): 561–79.

Delbaen, Freddy, and Walter Schachermayer. 1994. “A General Version of the Fundamental Theorem of Asset Pricing.” Mathematische Annalen 300 (1): 463–520.

DeMiguel, Victor, Lorenzo Garlappi, and Raman Uppal. 2009. “Optimal Versus Naive Diversification: How Inefficient Is the 1/N Portfolio Strategy?” Review of Financial Studies 22 (5): 1915–53.

DeMiguel, Victor, Alberto Martin Utrera, Raman Uppal, and Francisco J Nogales. 2020. “A Transaction-Cost Perspective on the Multitude of Firm Characteristics.” Review of Financial Studies 33 (5): 2180–2222.

Dichtl, Hubert, Wolfgang Drobetz, Andreas Neuhierl, and Viktoria-Sophie Wendt. 2020. “Data Snooping in Equity Premium Prediction.” Journal of Forecasting Forthcoming.

Dichtl, Hubert, Wolfgang Drobetz, and Viktoria-Sophie Wendt. 2020. “How to Build a Factor Portfolio: Does the Allocation Strategy Matter?” European Financial Management Forthcoming.

Elliott, Graham, Nikolay Kudrin, and Kaspar Wuthrich. 2019. “Detecting P-Hacking.” arXiv Preprint, no. 1906.06711.

Fabozzi, Frank J, and Marcos López de Prado. 2018. “Being Honest in Backtest Reporting: A Template for Disclosing Multiple Tests.” Journal of Portfolio Management 45 (1): 141–47.

Fama, Eugene F, and Kenneth R French. 1993. “Common Risk Factors in the Returns on Stocks and Bonds.” Journal of Financial Economics 33 (1): 3–56.

Farmer, Leland, Lawrence Schmidt, and Allan Timmermann. 2019. “Pockets of Predictability.” SSRN Working Paper 3152386.

Goto, Shingo, and Yan Xu. 2015. “Improving Mean Variance Optimization Through Sparse Hedging Restrictions.” Journal of Financial and Quantitative Analysis 50 (6): 1415–41.

Gu, Shihao, Bryan T Kelly, and Dacheng Xiu. 2020b. “Empirical Asset Pricing via Machine Learning.” Review of Financial Studies 33 (5): 2223–73.

Harvey, Campbell R. 2017. “Presidential Address: The Scientific Outlook in Financial Economics.” Journal of Finance 72 (4): 1399–1440.

Harvey, Campbell R, John C Liechty, Merrill W Liechty, and Peter Müller. 2010. “Portfolio Selection with Higher Moments.” Quantitative Finance 10 (5): 469–85.

Harvey, Campbell R, and Yan Liu. 2015. “Backtesting.” Journal of Portfolio Management 42 (1): 13–28.

Head, Megan L, Luke Holman, Rob Lanfear, Andrew T Kahn, and Michael D Jennions. 2015. “The Extent and Consequences of P-Hacking in Science.” PLoS Biology 13 (3): e1002106.

Ho, Yu-Chi, and David L Pepyne. 2002. “Simple Explanation of the No-Free-Lunch Theorem and Its Implications.” Journal of Optimization Theory and Applications 115 (3): 549–70.

Hübner, Georges. 2005. “The Generalized Treynor Ratio.” Review of Finance 9 (3): 415–35.

Jensen, Michael C. 1968. “The Performance of Mutual Funds in the Period 1945–1964.” Journal of Finance 23 (2): 389–416.

Jorion, Philippe. 1985. “International Portfolio Diversification with Estimation Risk.” Journal of Business, 259–78.

Kan, Raymond, and Guofu Zhou. 2007. “Optimal Portfolio Choice with Parameter Uncertainty.” Journal of Financial and Quantitative Analysis 42 (3): 621–56.

Kim, Woo Chang, Jang Ho Kim, and Frank J Fabozzi. 2014. “Deciphering Robust Portfolios.” Journal of Banking & Finance 45: 1–8.

Lai, Tze Leung, Haipeng Xing, Zehao Chen, and others. 2011. “Mean–Variance Portfolio Optimization When Means and Covariances Are Unknown.” Annals of Applied Statistics 5 (2A): 798–823.

Ledoit, Oliver, and Michael Wolf. 2008. “Robust Performance Hypothesis Testing with the Sharpe Ratio.” Journal of Empirical Finance 15 (5): 850–59.

Lopez de Prado, Marcos, and David H Bailey. 2020. “The False Strategy Theorem: A Financial Application of Experimental Mathematics.” American Mathematical Monthly Forthcoming.

Maillard, Sébastien, Thierry Roncalli, and Jérôme Teiletche. 2010. “The Properties of Equally Weighted Risk Contribution Portfolios.” Journal of Portfolio Management 36 (4): 60–70.

Maillet, Bertrand, Sessi Tokpavi, and Benoit Vaucher. 2015. “Global Minimum Variance Portfolio Optimisation Under Some Model Risk: A Robust Regression-Based Approach.” European Journal of Operational Research 244 (1): 289–99.

Markowitz, Harry. 1952. “Portfolio Selection.” Journal of Finance 7 (1): 77–91.

Novy-Marx, Robert, and Mihail Velikov. 2015. “A Taxonomy of Anomalies and Their Trading Costs.” Review of Financial Studies 29 (1): 104–47.

Pedersen, Lasse Heje, Abhilash Babu, and Ari Levine. 2020. “Enhanced Portfolio Optimization.” SSRN Working Paper 3530390.

Pflug, Georg Ch, Alois Pichler, and David Wozabal. 2012. “The 1/N Investment Strategy Is Optimal Under High Model Ambiguity.” Journal of Banking & Finance 36 (2): 410–17.

Plyakha, Yuliya, Raman Uppal, and Grigory Vilkov. 2016. “Equal or Value Weighting? Implications for Asset-Pricing Tests.” SSRN Working Paper 1787045.

Romano, Joseph P, and Michael Wolf. 2005. “Stepwise Multiple Testing as Formalized Data Snooping.” Econometrica 73 (4): 1237–82.

Romano, Joseph P, and Michael Wolf. 2013. “Testing for Monotonicity in Expected Asset Returns.” Journal of Empirical Finance 23: 93–116.

Sharpe, William F. 1966. “Mutual Fund Performance.” Journal of Business 39 (1): 119–38.

Simonsohn, Uri, Leif D Nelson, and Joseph P Simmons. 2014. “P-Curve: A Key to the File-Drawer.” Journal of Experimental Psychology: General 143 (2): 534.

Snow, Derek. 2020. “Machine Learning in Asset Management: Part 2: Portfolio Construction—Weight Optimization.” Journal of Financial Data Science Forthcoming.

Suhonen, Antti, Matthias Lennkh, and Fabrice Perez. 2017. “Quantifying Backtest Overfitting in Alternative Beta Strategies.” Journal of Portfolio Management 43 (2): 90–104.

Timmermann, Allan. 2018. “Forecasting Methods in Finance.” Annual Review of Financial Economics 10: 449–79.

Treynor, Jack L. 1965. “How to Rate Management of Investment Funds.” Harvard Business Review 43 (1): 63–75.

White, Halbert. 2000. “A Reality Check for Data Snooping.” Econometrica 68 (5): 1097–1126.

Wolpert, David H. 1992a. “On the Connection Between in-Sample Testing and Generalization Error.” Complex Systems 6 (1): 47.

Wolpert, David H, and William G Macready. 1997. “No Free Lunch Theorems for Optimization.” IEEE Transactions on Evolutionary Computation 1 (1): 67–82.

Constraints often have beneficial effects on portfolio composition, see Jagannathan and Ma (2003) and DeMiguel et al. (2009).

A long position in an asset with positive return or a short position in an asset with negative return.

We invite the reader to have a look at the thoughtful albeit theoretical paper by Arjovsky et al. (2019).

In the thread https://twitter.com/fchollet/status/1177633367472259072, François Chollet, the creator of Keras, argues that ML predictions based on price data cannot be profitable in the long term. Given the wide access to financial data, it is likely that the statement holds for predictions stemming from factor-related data as well.